24. Beyond Counting Individual Words: N-grams

So far in our journey through text data processing, we’ve dealt with counting individual words. While this approach, often referred to as a “bag of words” model, can provide a basic level of understanding and can be useful for certain tasks, it often falls short in capturing the true complexity and richness of language. This is mainly because it treats each word independently and ignores the context and order of words, which are fundamental to human language comprehension.

For example, consider the two phrases

“The movie is good, but the actor was bad.”

and

“The movie is bad, but the actor was good.”

If we simply count individual words, both phrases are identical because they contain the exact same words! However, their meanings are diametrically opposed. The order of words and the context in which they are used are important.
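A minimal sketch in plain Python makes this concrete. It assumes a very simple tokenizer (lowercasing plus the regular expression `\w+`), not the exact tokenizer used later in this chapter:

```python
import re
from collections import Counter


def bag_of_words(text):
    """Count lowercase word tokens, ignoring their order (simplified tokenizer)."""
    return Counter(re.findall(r"\w+", text.lower()))


s1 = "The movie is good, but the actor was bad."
s2 = "The movie is bad, but the actor was good."

# Both sentences produce exactly the same word counts
print(bag_of_words(s1) == bag_of_words(s2))  # True
```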

24.1. N-grams

N-grams are contiguous sequences of n items in a given sample of text or speech. In the context of text analysis, an item can be a character, a syllable, or a word, although words are the most commonly used items. The integer n in “n-gram” refers to the number of items in the sequence, so a bigram (or 2-gram) is a sequence of two words, a trigram (3-gram) is a sequence of three words, and so on.

To illustrate, consider the two sentences above.

With 3-grams we could also get the pieces “movie is good”, “movie is bad”, “actor was bad”, and “actor was good”.
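The same toy tokenizer can be extended to n-grams. The helper `word_ngrams` below is hypothetical (written for illustration only); it collects all contiguous word sequences of length n and shows which 3-grams occur in only one of the two sentences:

```python
import re


def word_ngrams(text, n):
    """Return the set of contiguous n-word sequences in a lowercased text."""
    tokens = re.findall(r"\w+", text.lower())
    return {" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


s1 = "The movie is good, but the actor was bad."
s2 = "The movie is bad, but the actor was good."

trigrams_1 = word_ngrams(s1, 3)
trigrams_2 = word_ngrams(s2, 3)

# 3-grams that distinguish the two sentences
print(sorted(trigrams_1 - trigrams_2))
# ['actor was bad', 'good but the', 'is good but', 'movie is good']
print(sorted(trigrams_2 - trigrams_1))
# ['actor was good', 'bad but the', 'is bad but', 'movie is bad']
```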

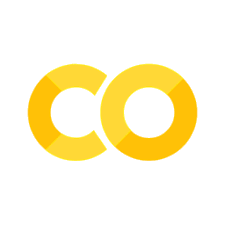

Bigrams (or 2-grams) would not fully catch those differences: pieces such as “is good” or “was bad” do not tell us whether the movie or the actor is meant. However, bigrams can be helpful in slightly simpler cases, such as “don’t like” versus “do like” (see Fig. 24.1). A lot will depend on the text normalization and tokenization process. In some cases, don't will be split into two tokens (do and not), while other workflows might leave it as don't or reduce it to don (for instance, the TfidfVectorizer from Scikit-Learn, whose default token pattern drops the single-letter t). In the first case, we would again need 3-grams to fully represent “don’t like” as ("do", "not", "like").

Fig. 24.1 Two example sentences can be compared based on the words they contain (left table). But in many cases, meaningful distinctions become more pronounced when n-grams are included (here: 2-grams added to the right table). Identical words and 2-grams between the sentences are marked by yellow boxes.
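To check what Scikit-Learn's default tokenization does with a contraction, we can ask a TfidfVectorizer for its analyzer, i.e. the function that turns a raw string into the features it counts. The example sentence is made up for illustration:

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# build_analyzer() returns the preprocessing + tokenization + n-gram function
analyzer = TfidfVectorizer(ngram_range=(1, 2)).build_analyzer()

features = analyzer("I don't like it")
print(features)
# ['don', 'like', 'it', 'don like', 'like it']
```

With the default token pattern, “I” and the “t” of “don’t” are dropped because they are single characters, so the negation only survives as the fragment “don”.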

Now we will see how we can make use of such n-grams.

import os

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sb

from sklearn.feature_extraction.text import TfidfVectorizer

# Set the ggplot style

plt.style.use("ggplot")

24.2. N-grams in TF-IDF Vectors

When creating TF-IDF vectors, we can incorporate the concept of n-grams. The scikit-learn TfidfVectorizer provides the ngram_range parameter, which specifies the range of n-gram sizes to include in the feature vectors. Setting this parameter to (1, 3), for instance, means that 1-grams, 2-grams, and 3-grams will be included. So be careful not to set the upper bound of ngram_range too high. Why? Well, let’s see. But first, we will import a dataset with plenty of text documents in it.
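A small sketch on the two example sentences from the beginning of this chapter shows how the vocabulary grows with the upper bound of ngram_range (on a real corpus, the growth is far steeper):

```python
from sklearn.feature_extraction.text import TfidfVectorizer

sentences = [
    "The movie is good, but the actor was bad.",
    "The movie is bad, but the actor was good.",
]

vocab_sizes = {}
for ngram_range in [(1, 1), (1, 2), (1, 3)]:
    vectorizer = TfidfVectorizer(ngram_range=ngram_range)
    vectors = vectorizer.fit_transform(sentences)
    # number of columns = number of distinct n-grams in the vocabulary
    vocab_sizes[ngram_range] = vectors.shape[1]
    print(ngram_range, vectors.shape[1])
# (1, 1) 8
# (1, 2) 19
# (1, 3) 30
```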

24.2.1. Dataset - Madrid Restaurant Reviews

We will now use a large, text-based dataset containing more than 176,000 restaurant reviews from Madrid (see dataset on Zenodo). The dataset (about 142MB) can be downloaded via the following code block.

In the following, we will work with “only” the last 40,000 entries of this dataset. You can re-run this code on the full dataset by removing the NUM_REVIEWS restriction. Question to you: Does this improve the models?


"""

This code block downloads the data from zenodo and stores it in a local 'datasets' folder.

"""

import requests

import os

def download_from_zenodo(url, save_path):

"""

Downloads a file from a given Zenodo link and saves it to the specified path.

Parameters:

- url: The Zenodo link to the file to be downloaded.

- save_path: Path where the file should be saved.

"""

# Check if the file already exists

if os.path.exists(save_path):

print(f"File {save_path} already exists. Skipping download.")

return None

response = requests.get(url, stream=True)

response.raise_for_status()

with open(save_path, 'wb') as f:

for chunk in response.iter_content(chunk_size=8192):

f.write(chunk)

print(f"File downloaded successfully and saved to {save_path}")

# Zenodo link to the dataset

zenodo_link = r"https://zenodo.org/records/6583422/files/Madrid_reviews.csv?download=1"

# Path to save the downloaded dataset (you can modify this as needed)

output_path = os.path.join("..", "datasets", "madrid_reviews.csv")

# Create directory if it doesn't exist

os.makedirs(os.path.dirname(output_path), exist_ok=True)

# Download the dataset

download_from_zenodo(zenodo_link, output_path)

File downloaded successfully and saved to ../datasets/madrid_reviews.csv

NUM_REVIEWS = 40_000 # remove this part to re-run the following code on the full dataset!

filename = "../datasets/madrid_reviews.csv"

data = pd.read_csv(filename)

data = data.drop(["Unnamed: 0"], axis=1)

data = data.iloc[-NUM_REVIEWS:, :]

data.head()

| | parse_count | restaurant_name | rating_review | sample | review_id | title_review | review_preview | review_full | date | city | url_restaurant | author_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 136848 | 151539 | Calcuta | 2 | Negative | review_154383514 | Friendly fakey | 'Vegetable Korma' was really just a bland yell... | 'Vegetable Korma' was really just a bland yell... | March 12, 2013 | Madrid | https://www.tripadvisor.com/Restaurant_Review-... | UID_83472 |
| 136849 | 151540 | La_Tahona_de_Alburquerque | 5 | Positive | review_560721206 | Great value & home made. | A typical restaurant/bar very popular with loc... | A typical restaurant/bar very popular with loc... | February 15, 2018 | Madrid | https://www.tripadvisor.com/Restaurant_Review-... | UID_35302 |
| 136850 | 151541 | La_Tahona_de_Alburquerque | 3 | Negative | review_178870964 | Great salmon! Big serving. | Though the place looks a bit old and some of t... | Though the place looks a bit old and some of t... | September 27, 2013 | Madrid | https://www.tripadvisor.com/Restaurant_Review-... | UID_83473 |
| 136851 | 151542 | La_Tagliatella_C_C_Principe_Pio_Madrid | 2 | Negative | review_309030259 | Apauling management | My boyfriend and I came here for a birthday lu... | My boyfriend and I came here for a birthday lu... | September 10, 2015 | Madrid | https://www.tripadvisor.com/Restaurant_Review-... | UID_10033 |
| 136852 | 151544 | La_Barraca | 2 | Negative | review_316597709 | Poor Service, paella was not prepared properly... | It is almost impossible to get a bad meal in M... | It is almost impossible to get a bad meal in M... | October 6, 2015 | Madrid | https://www.tripadvisor.com/Restaurant_Review-... | UID_83474 |

data.shape

(40000, 12)

As we can see, we have a pretty extensive dataset with many different restaurant reviews, our documents (review_full), as well as ratings (rating_review). We will use both of them in the following. Let’s first look at one of the reviews to get an idea of what the data looks like.

data.review_full.iloc[0]

"'Vegetable Korma' was really just a bland yellow curry?frozen peas & carrots, turmeric, milk, maybe a bit of onion. Papadums OK. Others said other dishes were fine. But all lacked the odors of the many spices I've come to expect from India."

24.3. TF-IDF with Bigrams: Growing Vectors and Managing High Dimensionality

As we did in the previous chapters, we can simply use the Scikit-Learn TfidfVectorizer to create tfidf-vectors of our documents, but now with ngram_range set to include more than just 1-grams. For a start, we will use 1-grams and 2-grams:

from sklearn.feature_extraction.text import TfidfVectorizer

# considers both unigrams and bigrams

vectorizer = TfidfVectorizer(ngram_range=(1, 2))

tfidf_vectors = vectorizer.fit_transform(data.review_full)

tfidf_vectors.shape

(40000, 532156)

Look at the size of those vectors!

Even for 1-grams, the tfidf-vectors we saw in the previous chapters were rather large. But with higher n-grams, this can really explode because there are so many more possible combinations of words.

So, clearly, using higher n-grams comes at a cost. The more we increase the size of our n-grams, the higher the dimensionality of our feature vectors. In the case of bigrams, for every pair of words that occur together in our text corpus, we add a new dimension to our feature space. This can quickly lead to an explosion of features. For instance, a modest vocabulary of 1,000 words leads to a potential of up to 1,000,000 (1,000 x 1,000) bigrams.
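The arithmetic can be sketched on a made-up mini corpus: with a vocabulary of size V there are V**n possible n-grams, but only a small share of them ever occurs in actual text:

```python
import re

corpus = [
    "the food was good",
    "the service was good",
    "the food was cold",
]
tokenized = [re.findall(r"\w+", doc.lower()) for doc in corpus]
vocab = {token for tokens in tokenized for token in tokens}


def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return list(zip(*(tokens[i:] for i in range(n))))


results = {}
for n in (1, 2, 3):
    observed = {gram for tokens in tokenized for gram in ngrams(tokens, n)}
    potential = len(vocab) ** n
    results[n] = (len(observed), potential)
    print(f"{n}-grams: {len(observed)} observed out of {potential} possible")
# 1-grams: 6 observed out of 6 possible
# 2-grams: 6 observed out of 36 possible
# 3-grams: 5 observed out of 216 possible
```

For the 1,000-word vocabulary mentioned above, the same formula gives 1,000**2 = 1,000,000 possible bigrams and 1,000**3 = 10**9 possible trigrams.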

This high dimensionality can lead to two issues:

- Sparsity: Most documents in the corpus will not contain most of the possible bigrams, leading to a feature matrix where most values are zero, i.e., a sparse matrix.
- Computational resources: The computational requirements for storing and processing these feature vectors can become significant, especially for large text corpora.
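Sparsity is easy to measure on the scipy sparse matrix returned by TfidfVectorizer: its `nnz` attribute counts the stored non-zero entries. This sketch uses two made-up reviews and compares unigrams with 1- to 3-grams; on a corpus of this tiny size the matrix is still fairly dense, but the share of non-zero cells already drops as n-grams are added:

```python
from sklearn.feature_extraction.text import TfidfVectorizer

docs = [
    "the food was good",
    "the service was slow and the food cold",
]

stats = {}
for ngram_range in [(1, 1), (1, 3)]:
    X = TfidfVectorizer(ngram_range=ngram_range).fit_transform(docs)
    density = X.nnz / (X.shape[0] * X.shape[1])  # share of non-zero cells
    stats[ngram_range] = (X.shape, X.nnz)
    print(ngram_range, X.shape, X.nnz, f"density={density:.3f}")
# (1, 1) (2, 8) 11 density=0.688
# (1, 3) (2, 25) 29 density=0.580
```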

Several techniques can help manage this high-dimensionality problem:

- Feature selection: We can limit the number of bigrams we include in our feature vector. This could be done based on the frequency of the bigrams. For example, we could choose to include only those bigrams that occur more than a certain number of times in the corpus.
- Dimensionality reduction: Techniques such as Principal Component Analysis (PCA) or Truncated Singular Value Decomposition (TruncatedSVD) can be used to reduce the dimensionality of the feature space while preserving as much of the variance in the data as possible. For sparse tf-idf matrices, TruncatedSVD is usually the better fit because, unlike PCA, it does not center the data and can therefore work directly on the sparse matrix.
- Using a hashing vectorizer: Scikit-learn provides a HashingVectorizer that uses a hash function to map the features to indices in the feature vector. This approach has a constant memory footprint and does not need to keep a vocabulary dictionary in memory, which makes it suitable for large text corpora. The price is that features can no longer be mapped back to their n-grams, and different n-grams may collide in the same index.
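Two of these options can be sketched with scikit-learn on a few made-up reviews: HashingVectorizer produces a fixed number of columns (here 2**10, an arbitrary choice) without storing a vocabulary, and TruncatedSVD then compresses the sparse matrix to a handful of dense dimensions. Note that HashingVectorizer does not compute idf weights by itself; it could be followed by a TfidfTransformer if tf-idf weighting is needed.

```python
from sklearn.decomposition import TruncatedSVD
from sklearn.feature_extraction.text import HashingVectorizer

docs = [
    "the food was good",
    "the service was slow",
    "great tapas and good wine",
    "cheap beers and football on tv",
]

# Hashing: the number of columns is fixed in advance, no vocabulary is kept
hasher = HashingVectorizer(n_features=2**10, ngram_range=(1, 2))
X_hashed = hasher.fit_transform(docs)
print(X_hashed.shape)  # (4, 1024)

# TruncatedSVD accepts the sparse matrix directly (no centering needed)
svd = TruncatedSVD(n_components=2, random_state=0)
X_reduced = svd.fit_transform(X_hashed)
print(X_reduced.shape)  # (4, 2)
```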

It’s important to weigh the trade-offs between capturing more context using n-grams and managing the resulting high dimensionality.

Here, we will use the simplest way to reduce the tfidf vector size: a more restrictive feature selection!

24.3.1. Restrict the Tfidf Vector Sizes

A very effective parameter for reducing the number of considered n-grams is min_df, the minimum document frequency.

We can increase it to 10, so that only n-grams that occur in at least 10 of our documents will be considered for our vectors. In addition, we set max_df=0.2, which removes all n-grams that occur in more than 20% of the documents.

vectorizer = TfidfVectorizer(min_df=10, max_df=0.2,

ngram_range=(1, 2))

tfidf_vectors = vectorizer.fit_transform(data.review_full)

tfidf_vectors.shape

(40000, 36817)

This looks much better! Maybe we can even include 3-grams (this time with a slightly lower min_df of 5)?

vectorizer = TfidfVectorizer(min_df=5, max_df=0.2,

ngram_range=(1, 3))

tfidf_vectors = vectorizer.fit_transform(data.review_full)

tfidf_vectors.shape

(40000, 124025)

This looks OK, at least size-wise. The reason why the vector size doesn’t explode is that the min_df parameter also applies to 2-grams, 3-grams, etc. Here, this means that only the 3-grams which occur in at least min_df documents will be kept.

Now we should check which n-grams the vectorizer finally included.

vectorizer.get_feature_names_out()[-100:]

array(['your table to', 'your tapa', 'your tapas', 'your tapas and',

'your tapas crawl', 'your taste', 'your taste buds',

'your tastebuds', 'your thing', 'your time', 'your time and',

'your time here', 'your time in', 'your time or',

'your time there', 'your time to', 'your to', 'your to do',

'your tour', 'your trip', 'your trip to', 'your tummy',

'your turn', 'your typical', 'your typical tapas', 'your usual',

'your valuables', 'your visit', 'your visit to', 'your visiting',

'your visiting madrid', 'your waiter', 'your wallet', 'your way',

'your way around', 'your way in', 'your way through',

'your way to', 'your wife', 'your wine', 'your wine and', 'youre',

'yours', 'yourself', 'yourself and', 'yourself at',

'yourself favor', 'yourself favor and', 'yourself favour',

'yourself favour and', 'yourself for', 'yourself in',

'yourself in madrid', 'yourself in the', 'yourself on',

'yourself the', 'yourself to', 'yourselves', 'yr', 'yr old', 'yrs',

'yuck', 'yugo', 'yuk', 'yum', 'yum and', 'yum the', 'yum we',

'yum yum', 'yumm', 'yummy', 'yummy and', 'yummy but', 'yummy food',

'yummy food and', 'yummy if', 'yummy it', 'yummy sangria',

'yummy tapas', 'yummy the', 'yummy too', 'yummy we', 'yummy would',

'yup', 'yuzu', 'zamburiñas', 'zara', 'zaragoza', 'zarra',

'zealand', 'zero', 'zero stars', 'zing', 'zone', 'zones', 'zoo',

'zucchini', 'zucchini and', 'zucchini with', 'ástor'], dtype=object)

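Well, these do not always look like meaningful word combinations, and there are still some foreign-language pieces among our n-grams. We do see a lot of 2-grams and 3-grams, but many of them seem grammatically odd (for instance, “yourself favor” or “yum the”).

Why is that?

The main reason is the default tokenization of the TfidfVectorizer: its token pattern ignores punctuation and drops single-character tokens such as “a” or “I” before the n-grams are built. A phrase such as “do yourself a favor” therefore yields the 2-gram “yourself favor”, and n-grams can run across sentence boundaries, which likely explains pieces such as “yum the”. The selection criteria min_df and max_df, in contrast, do not change the n-grams themselves; they only decide which complete n-grams are kept as features.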
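The analyzer of a TfidfVectorizer shows this directly. In this sketch the input sentence is made up; with default settings, the single-letter “a” disappears and punctuation is ignored, so bigrams form across the sentence breaks:

```python
from sklearn.feature_extraction.text import TfidfVectorizer

analyzer = TfidfVectorizer(ngram_range=(1, 2)).build_analyzer()
features = analyzer("Do yourself a favor. Yum! The tapas are great")

# bigrams only (the analyzer lists unigrams first, then bigrams)
bigrams = [f for f in features if " " in f]
print(bigrams)
# ['do yourself', 'yourself favor', 'favor yum', 'yum the', 'the tapas', 'tapas are', 'are great']
```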

But we can leave it to the machine learning algorithms now to make more sense of it.

First, however, it might be good to reduce the vectors a bit further.

Instead of manually adjusting min_df many times, we can also use the parameter max_features to set an upper limit. The vectorizer then keeps only the max_features n-grams with the highest term frequency across the corpus (after min_df and max_df have been applied) and drops all others.

vectorizer = TfidfVectorizer(min_df=5, max_df=0.2,

ngram_range=(1, 3),

max_features=10_000,

)

tfidf_vectors = vectorizer.fit_transform(data.review_full)

tfidf_vectors.shape

(40000, 10000)

vectorizer.get_feature_names_out()[-100:]

array(['you can', 'you can also', 'you can buy', 'you can choose',

'you can eat', 'you can enjoy', 'you can find', 'you can get',

'you can go', 'you can have', 'you can order', 'you can see',

'you can sit', 'you can try', 'you cannot', 'you choose',

'you come', 'you could', 'you do', 'you do not', 'you don',

'you don want', 'you eat', 'you enjoy', 'you enter', 'you expect',

'you feel', 'you feel like', 'you find', 'you for', 'you get',

'you get to', 'you go', 'you go to', 'you had', 'you have',

'you have the', 'you have to', 'you in', 'you just', 'you know',

'you like', 'you like to', 'you ll', 'you ll be', 'you ll find',

'you love', 'you make', 'you may', 'you might', 'you must',

'you must visit', 'you need', 'you need to', 'you order',

'you pay', 'you pay for', 'you re', 'you re in', 'you re looking',

'you re not', 'you really', 'you see', 'you should', 'you sit',

'you that', 'you the', 'you to', 'you try', 'you ve', 'you visit',

'you visit madrid', 'you walk', 'you want', 'you want to',

'you were', 'you will', 'you will be', 'you will find',

'you will get', 'you will have', 'you will not', 'you won',

'you won be', 'you would', 'you would expect', 'young', 'your',

'your food', 'your meal', 'your money', 'your mouth', 'your order',

'your own', 'your table', 'your time', 'your way', 'yourself',

'yum', 'yummy'], dtype=object)

OK, this looks quite good. Let’s try to work with those settings.

First, we do a data split to later train a machine learning model:

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(

data.review_full, data.rating_review, test_size=0.2, random_state=0)

print(f"Train dataset size: {X_train.shape}")

print(f"Test dataset size: {X_test.shape}")

Train dataset size: (32000,)

Test dataset size: (8000,)

This time we will start right away with a classification model:

24.3.2. Logistic Regression model

To later compare models, we will start without n-grams!

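And, importantly: we have to fit the tfidf-vectorizer on the training data only! Otherwise, information from the test set (its vocabulary and document frequencies) would leak into the features used for training.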
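One way to make this hard to get wrong is scikit-learn's Pipeline, which fits the vectorizer only on the data passed to `fit`. This is a sketch with made-up reviews, not the setup used below:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

train_docs = [
    "great food and friendly staff",
    "terrible service and cold food",
    "lovely tapas and great wine",
    "rude waiter and bad food",
]
train_ratings = [5, 1, 5, 1]
test_docs = ["great paella", "cold paella and rude staff"]

# The vectorizer inside the pipeline is fitted on train_docs only
pipe = make_pipeline(TfidfVectorizer(), LogisticRegression())
pipe.fit(train_docs, train_ratings)
predictions = pipe.predict(test_docs)

vocabulary = pipe.named_steps["tfidfvectorizer"].vocabulary_
print("paella" in vocabulary)  # False: words seen only in the test set are unknown
```

Because the vectorizer is part of the model, the same pipeline can also be passed to `cross_val_score`, which then refits the vectorizer inside each training fold.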

vectorizer = TfidfVectorizer(min_df=10, max_df=0.2,

max_features=10000,

#ngram_range=(1, 3)

)

tfidf_vectors = vectorizer.fit_transform(X_train)

tfidf_vectors.shape

(32000, 5919)

vectorizer.get_feature_names_out()[-100:]

array(['widely', 'wider', 'wife', 'wifi', 'wild', 'will', 'willing',

'win', 'wind', 'window', 'windows', 'wine', 'wines', 'wings',

'winner', 'winning', 'winter', 'wiped', 'wise', 'wisely', 'wish',

'wished', 'wishes', 'within', 'without', 'witnessed', 'wok',

'woman', 'women', 'won', 'wonder', 'wondered', 'wonderful',

'wonderfull', 'wonderfully', 'wondering', 'wont', 'wood', 'wooden',

'word', 'words', 'wore', 'work', 'worked', 'workers', 'working',

'works', 'world', 'worlds', 'worn', 'worried', 'worries', 'worry',

'worse', 'worst', 'worth', 'worths', 'worthwhile', 'worthy',

'would', 'wouldn', 'wouldnt', 'wow', 'wrap', 'wrapped', 'wraps',

'write', 'writers', 'writing', 'written', 'wrong', 'wrote', 'xo',

'xx', 'yamil', 'yeah', 'year', 'years', 'yelled', 'yelling',

'yellow', 'yes', 'yesterday', 'yet', 'yo', 'yoghurt', 'yogurt',

'york', 'young', 'younger', 'your', 'youre', 'yourself',

'yourselves', 'yr', 'yum', 'yummy', 'zero', 'zone', 'zucchini'],

dtype=object)

By the way: Why did we now get fewer than max_features n-grams?

from sklearn.linear_model import LogisticRegression

model = LogisticRegression(max_iter=300) # don't worry it also works without setting max_iter

model.fit(tfidf_vectors, y_train)

LogisticRegression(max_iter=300)

We then use the tfidf-vectorizer that was already fitted on the training data to transform our test data.

tfidf_vectors_test = vectorizer.transform(X_test)

predictions = model.predict(tfidf_vectors_test)

np.round(predictions[:20], 1)

array([5, 3, 4, 1, 5, 5, 1, 5, 4, 5, 4, 5, 5, 4, 5, 4, 5, 1, 5, 5])

y_test[:20].values

array([4, 3, 3, 1, 4, 4, 1, 4, 4, 5, 5, 4, 5, 5, 5, 3, 1, 1, 4, 5])

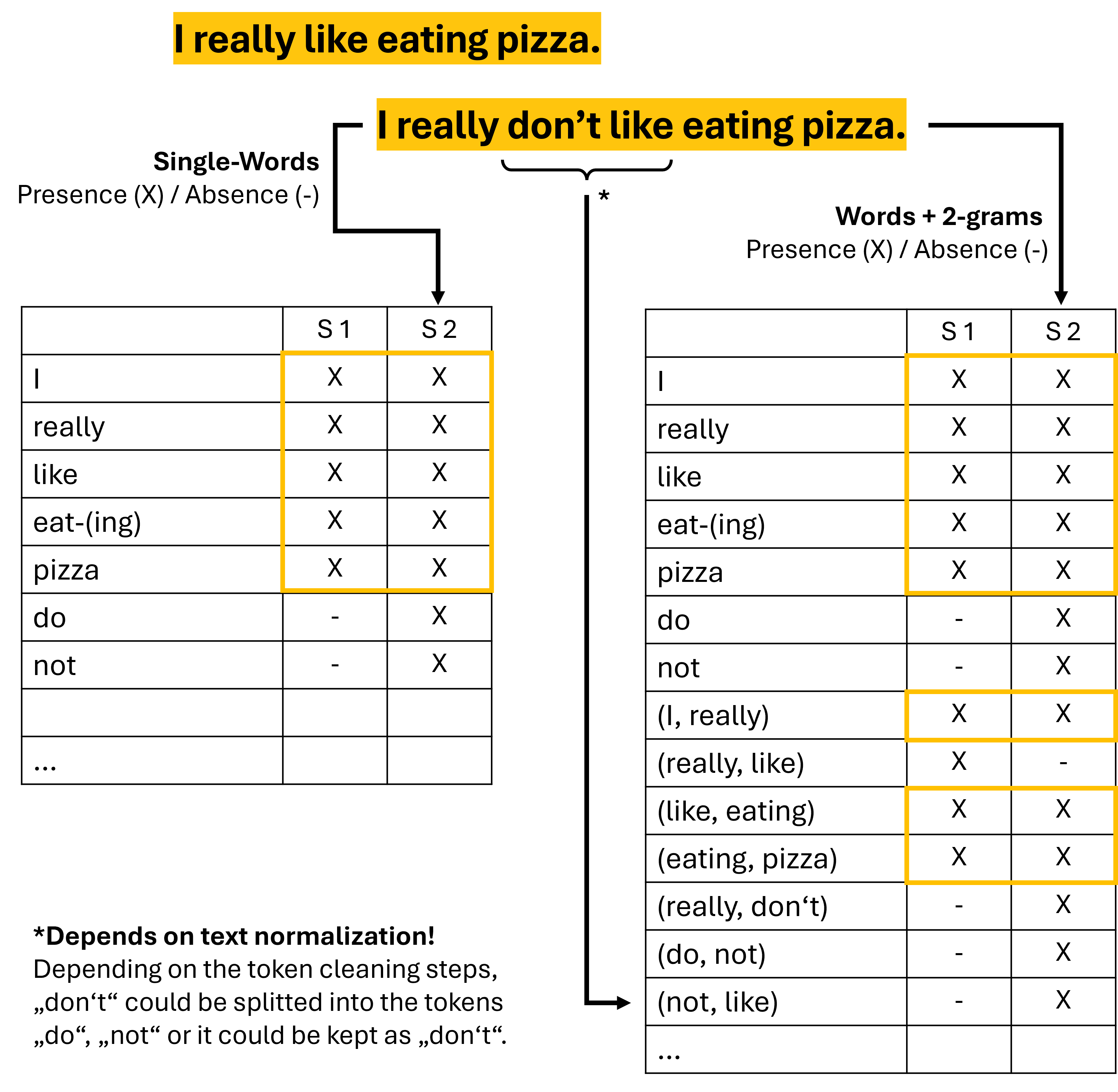

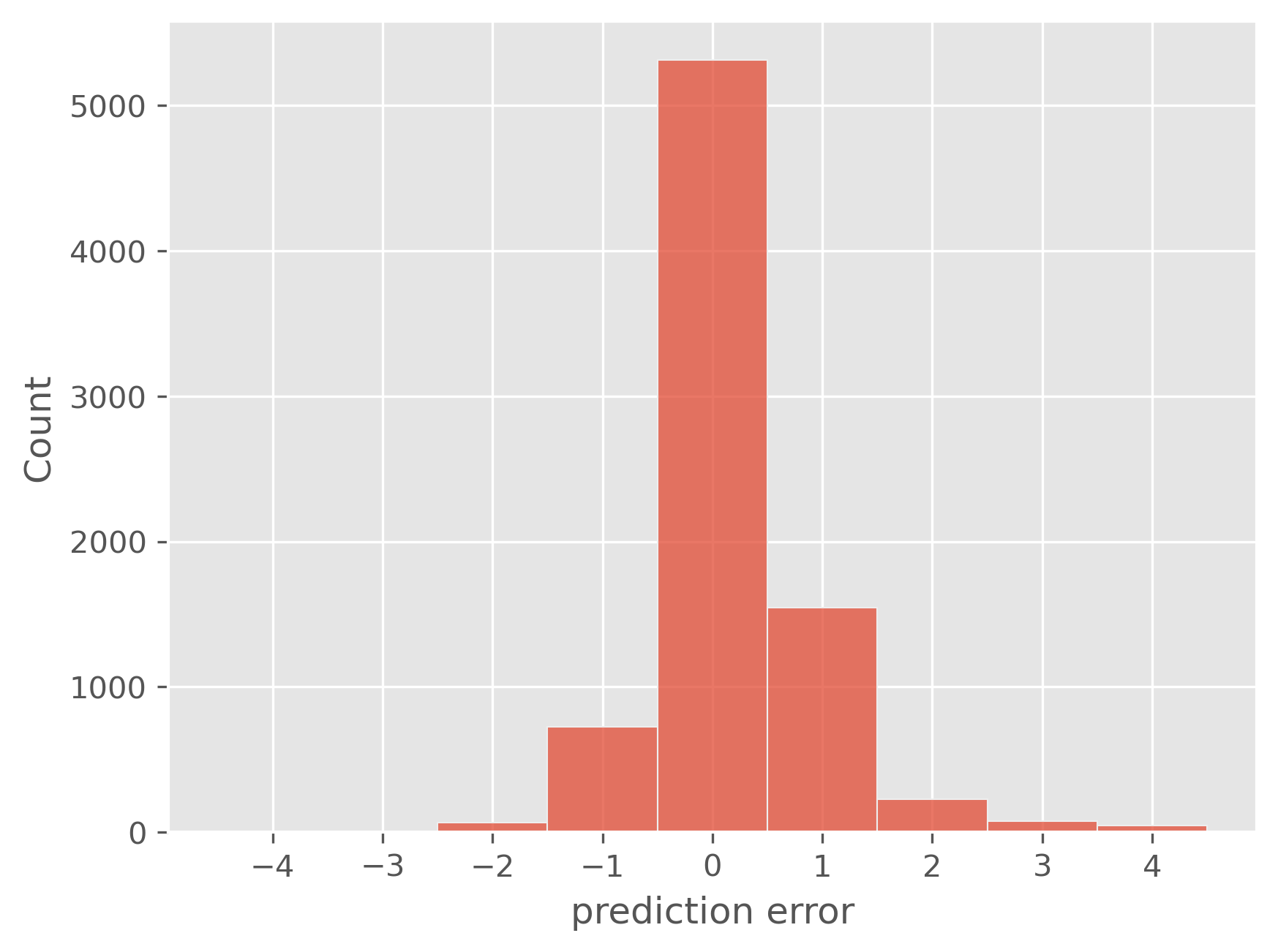

bins = np.arange(-4.5, 5.5, 1)

plt.figure(dpi=300)

sb.histplot(predictions - y_test, bins=bins)

plt.xlabel("prediction error")

plt.xticks(range(-4, 5)) # Set x-ticks to be all integers between -4 and 4

plt.show()

print(f"Mean absolute error (MAE): {np.abs(predictions - y_test).mean():.4f}")

Mean absolute error (MAE): 0.4320

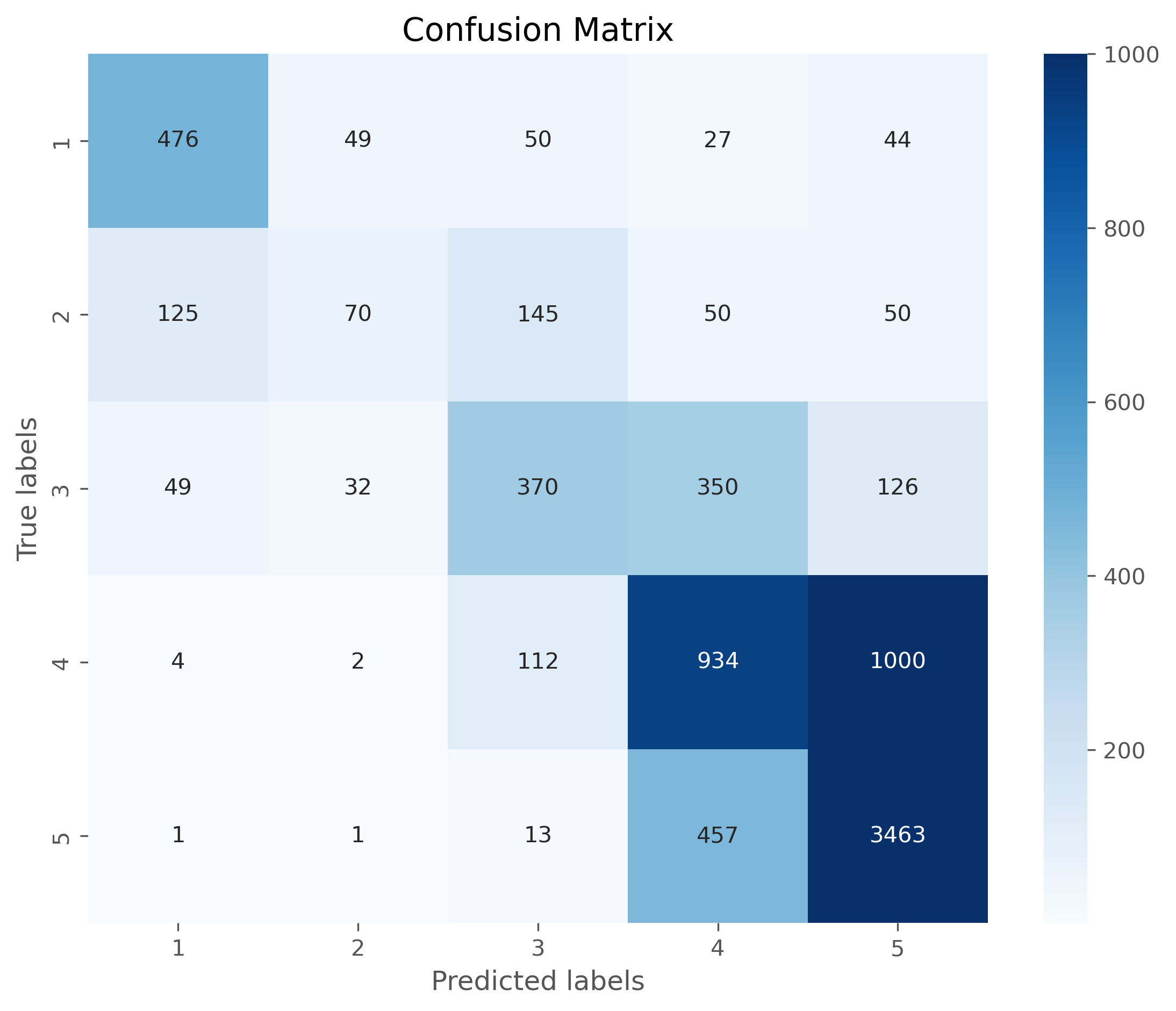

from sklearn.metrics import confusion_matrix, classification_report

cm = confusion_matrix(y_test, predictions, labels=model.classes_)

# Plotting the confusion matrix with a heatmap

plt.figure(figsize=(9,7), dpi=300)

sb.heatmap(cm, annot=True, fmt='d',

cmap='Blues',

xticklabels=model.classes_,

yticklabels=model.classes_,

vmax=1000,

)

plt.xlabel('Predicted labels')

plt.ylabel('True labels')

plt.title('Confusion Matrix')

plt.show()

24.3.2.1. Look at the Vectors

What do our vectors look like? Luckily, they are stored as sparse matrices, so that only the (few) non-zero elements are actually kept in memory. Often, our document tfidf-vectors will only contain a tiny fraction of all included n-grams:

tfidf_vectors[0, :].data

array([0.20140989, 0.37750596, 0.22927572, 0.32327298, 0.26211929,

0.35615086, 0.19799178, 0.19654302, 0.21729833, 0.18328515,

0.24375703, 0.22733288, 0.2354731 , 0.37297901])

tfidf_vectors[0, :].indices

array([5721, 1603, 2619, 3070, 2658, 203, 1161, 1948, 499, 4137, 1045,

305, 3471, 2637], dtype=int32)

And which words do these values belong to? We can map the indices back to the vocabulary of the vectorizer:

example_vector = pd.DataFrame({

"word": vectorizer.get_feature_names_out()[tfidf_vectors[0, :].indices],

"tfidf": tfidf_vectors[0, :].data

})

example_vector

| | word | tfidf |
|---|---|---|
| 0 | want | 0.201410 |
| 1 | discover | 0.377506 |
| 2 | how | 0.229276 |
| 3 | lived | 0.323273 |
| 4 | ice | 0.262119 |
| 5 | ages | 0.356151 |
| 6 | come | 0.197992 |
| 7 | expensive | 0.196543 |
| 8 | bad | 0.217298 |
| 9 | quality | 0.183285 |
| 10 | choose | 0.243757 |
| 11 | another | 0.227333 |
| 12 | near | 0.235473 |
| 13 | hundreds | 0.372979 |

24.3.3. Logistic Regression model + n-grams

Let us now re-run the same steps, but this time with 1-grams to 3-grams.

vectorizer = TfidfVectorizer(min_df=10, max_df=0.2,

max_features=10000,

ngram_range=(1, 3)

)

tfidf_vectors = vectorizer.fit_transform(X_train)

tfidf_vectors.shape

(32000, 10000)

vectorizer.get_feature_names_out()[-100:]

array(['you can also', 'you can buy', 'you can choose', 'you can eat',

'you can enjoy', 'you can find', 'you can get', 'you can go',

'you can have', 'you can order', 'you can see', 'you can sit',

'you can try', 'you cannot', 'you choose', 'you come', 'you could',

'you do', 'you do not', 'you don', 'you don have', 'you don want',

'you eat', 'you enjoy', 'you enter', 'you expect', 'you feel',

'you feel like', 'you find', 'you for', 'you get', 'you get to',

'you go', 'you go to', 'you had', 'you have', 'you have the',

'you have to', 'you in', 'you just', 'you know', 'you like',

'you ll', 'you ll be', 'you ll have', 'you love', 'you make',

'you may', 'you might', 'you must', 'you must visit', 'you need',

'you need to', 'you order', 'you pay', 'you pay for', 'you re',

'you re in', 'you re looking', 'you re not', 'you really',

'you see', 'you should', 'you sit', 'you that', 'you the',

'you to', 'you try', 'you ve', 'you visit', 'you visit madrid',

'you walk', 'you want', 'you want to', 'you were', 'you will',

'you will be', 'you will find', 'you will get', 'you will have',

'you will not', 'you won', 'you won be', 'you would',

'you would expect', 'young', 'your', 'your food', 'your meal',

'your money', 'your mouth', 'your order', 'your own', 'your table',

'your time', 'your way', 'yourself', 'yum', 'yummy', 'zero'],

dtype=object)

from sklearn.linear_model import LogisticRegression

model = LogisticRegression(max_iter=300) # don't worry it also works without setting max_iter

model.fit(tfidf_vectors, y_train)

LogisticRegression(max_iter=300)

tfidf_vectors_test = vectorizer.transform(X_test)

predictions = model.predict(tfidf_vectors_test)

np.round(predictions[:20], 1)

array([5, 4, 4, 1, 4, 5, 1, 4, 5, 5, 4, 5, 5, 4, 4, 4, 4, 1, 5, 5])

y_test[:20].values

array([4, 3, 3, 1, 4, 4, 1, 4, 4, 5, 5, 4, 5, 5, 5, 3, 1, 1, 4, 5])

bins = np.arange(-4.5, 5.5, 1)

plt.figure(dpi=300)

sb.histplot(predictions - y_test, bins=bins)

plt.xlabel("prediction error")

plt.xticks(range(-4, 5))

plt.show()

print(f"Mean absolute error (MAE): {np.abs(predictions - y_test).mean():.4f}")

Mean absolute error (MAE): 0.4095

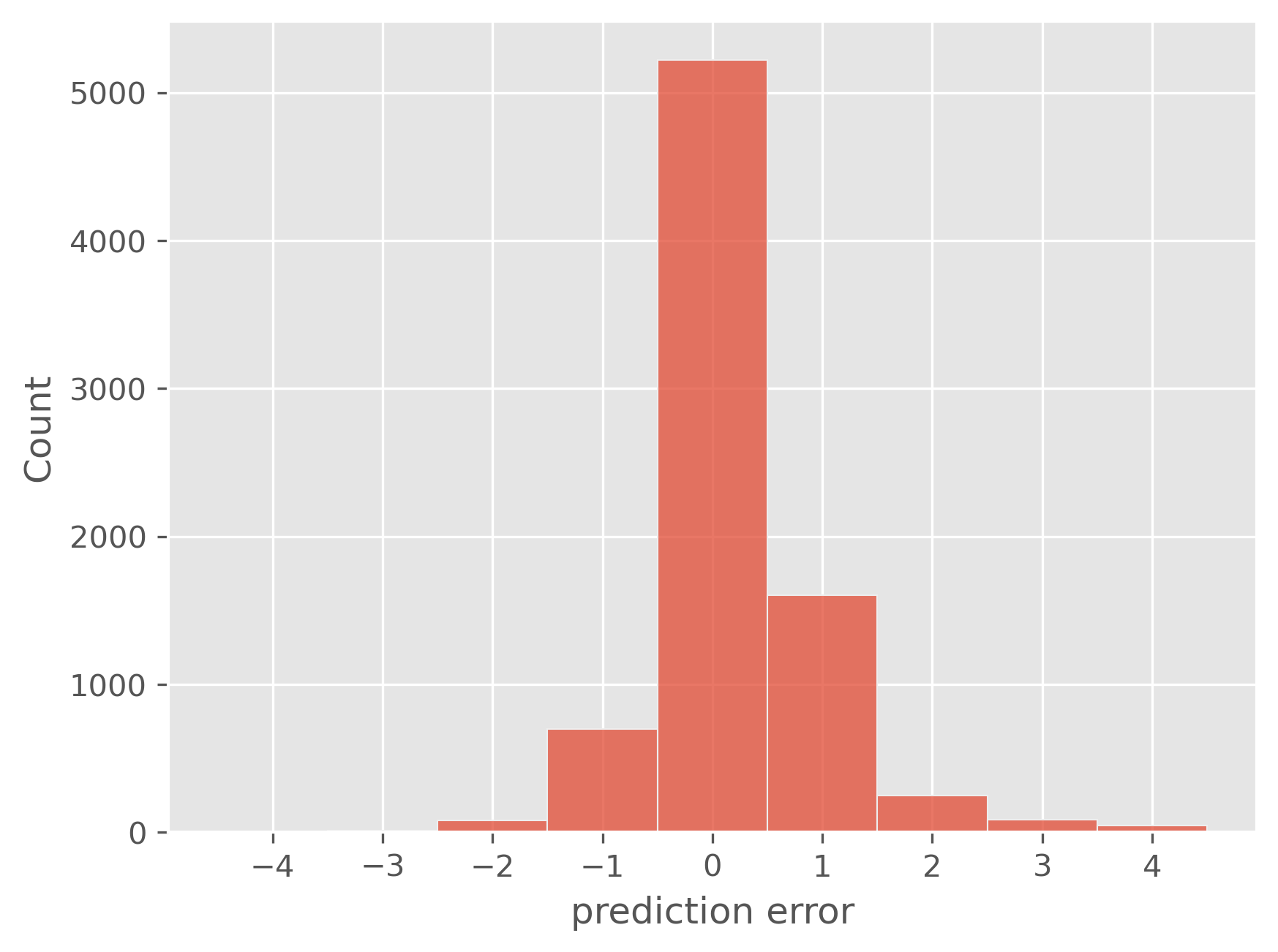

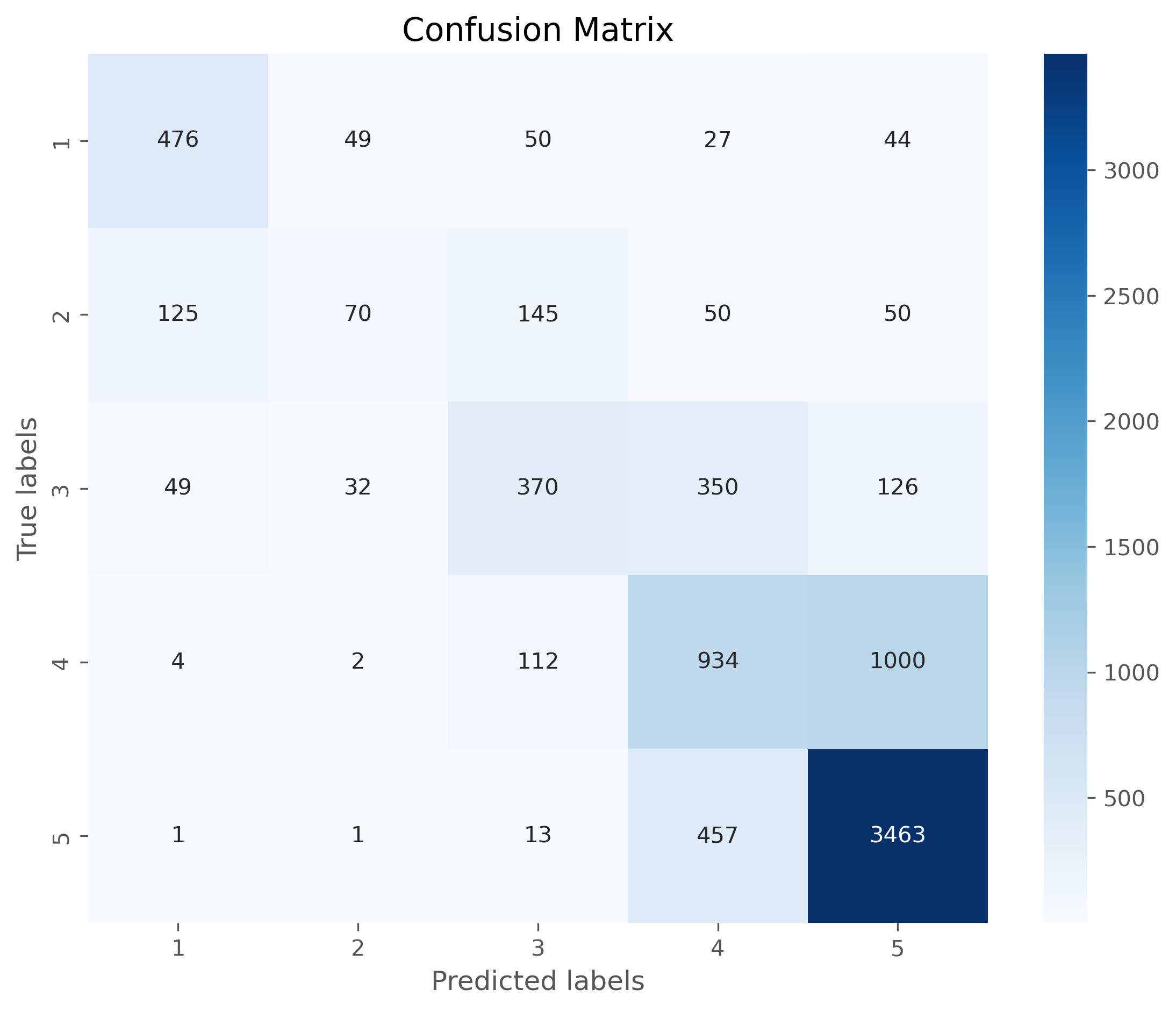

from sklearn.metrics import confusion_matrix, classification_report

cm = confusion_matrix(y_test, predictions, labels=model.classes_)

# Plotting the confusion matrix with a heatmap

plt.figure(figsize=(9,7), dpi=300)

sb.heatmap(cm, annot=True, fmt='d',

cmap='Blues',

xticklabels=model.classes_,

yticklabels=model.classes_,

vmax=1000

)

plt.xlabel('Predicted labels')

plt.ylabel('True labels')

plt.title('Confusion Matrix')

plt.show()

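24.3.4. Did the 2-grams and 3-grams help?

Well, the predictions only got slightly better: the mean absolute error dropped from 0.4320 to 0.4095. So the n-grams seem to have some effect, but nothing spectacular. However, this is not a general finding and might look very different for other datasets or problems.

We can now also look at the n-grams that have the largest impact on the model predictions. Note that with our five rating classes, model.coef_ contains one row of weights per class, and model.coef_[0] belongs to the first class, a rating of 1. Large positive weights therefore push a review towards a 1-star rating, while large negative weights push it away from one: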

ngrams = pd.DataFrame({"ngram": vectorizer.get_feature_names_out(),

"weight": model.coef_[0]

})

ngrams.sort_values("weight")

| | ngram | weight |
|---|---|---|
| 2033 | delicious | -3.217266 |
| 8734 | very good | -2.819554 |
| 2488 | excellent | -2.813513 |
| 7225 | tasty | -2.445878 |
| 1296 | bit | -2.292688 |
| ... | ... | ... |
| 1037 | avoid | 4.340851 |
| 6375 | rude | 4.344348 |
| 1082 | bad | 4.964864 |
| 9822 | worst | 5.030162 |
| 7252 | terrible | 5.198820 |

10000 rows × 2 columns

Here, too, we find only a few 2-grams (such as “very good” or “the best”) among the 20 largest and 20 smallest weights. Most of the time, the model still seems to judge the reviews based on individual words.
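To count the multi-word features among the most influential ones, we can split each n-gram on spaces. The sketch below uses a handful of n-grams and weights copied from the table above; in the notebook, the full `ngrams` DataFrame would be used instead. It ranks by the absolute weight, so both ends of the list are considered:

```python
import pandas as pd

# A few (n-gram, weight) pairs copied from the table above; in the notebook,
# `ngrams` is built from vectorizer.get_feature_names_out() and model.coef_[0].
ngrams = pd.DataFrame({
    "ngram": ["delicious", "very good", "excellent", "the best", "was good",
              "bad", "worst", "terrible"],
    "weight": [-3.217266, -2.819554, -2.813513, -1.954229, -1.923603,
               4.964864, 5.030162, 5.198820],
})

# Number of words per feature: 1 = unigram, 2 = bigram, 3 = trigram
ngrams["n"] = ngrams["ngram"].str.split().str.len()

# Rank by the size of the weight, regardless of its sign
order = ngrams["weight"].abs().sort_values(ascending=False).index
top_k = ngrams.loc[order].head(5)

print(top_k)
print("multi-word n-grams among the top 5:", int((top_k["n"] > 1).sum()))  # 1
```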

ngrams.sort_values("weight").head(20)

| ngram | weight | |

|---|---|---|

| 2033 | delicious | -3.217266 |

| 8734 | very good | -2.819554 |

| 2488 | excellent | -2.813513 |

| 7225 | tasty | -2.445878 |

| 1296 | bit | -2.292688 |

| 3002 | friendly | -2.284883 |

| 989 | atmosphere | -2.203932 |

| 5035 | nice | -1.972904 |

| 7364 | the best | -1.954229 |

| 7174 | tapas | -1.952985 |

| 1240 | best | -1.938627 |

| 8992 | was good | -1.923603 |

| 254 | amazing | -1.884184 |

| 6098 | quite | -1.818930 |

| 2382 | enjoyed | -1.815985 |

| 8527 | try | -1.789163 |

| 1936 | crowded | -1.717156 |

| 9656 | wine | -1.665158 |

| 5765 | perfect | -1.664695 |

| 8996 | was great | -1.581432 |

from sklearn.metrics import confusion_matrix, classification_report

print(confusion_matrix(y_test, predictions))

print(classification_report(y_test, predictions))

[[ 476 49 50 27 44]

[ 125 70 145 50 50]

[ 49 32 370 350 126]

[ 4 2 112 934 1000]

[ 1 1 13 457 3463]]

precision recall f1-score support

1 0.73 0.74 0.73 646

2 0.45 0.16 0.24 440

3 0.54 0.40 0.46 927

4 0.51 0.46 0.48 2052

5 0.74 0.88 0.80 3935

accuracy 0.66 8000

macro avg 0.59 0.53 0.54 8000

weighted avg 0.64 0.66 0.64 8000

24.3.5. Confusion matrix

The confusion matrix can tell us a lot about where the model works well and where it fails. It is often easier to read when plotted, for instance using seaborn's heatmap.
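With strongly imbalanced classes (here, many more 5-star than 2-star reviews), raw counts can be hard to compare across rows. `confusion_matrix(..., normalize="true")` divides each row by the number of true samples of that class, so the diagonal equals the per-class recall. A sketch with made-up labels:

```python
import numpy as np
from sklearn.metrics import confusion_matrix, recall_score

y_true = [1, 1, 1, 2, 2, 3, 3, 3, 3]
y_pred = [1, 1, 2, 2, 3, 3, 3, 2, 3]

cm = confusion_matrix(y_true, y_pred)
cm_norm = confusion_matrix(y_true, y_pred, normalize="true")

print(cm)
# [[2 1 0]
#  [0 1 1]
#  [0 1 3]]
print(np.round(cm_norm, 2))

# The diagonal of the row-normalized matrix is the per-class recall
print(np.allclose(np.diag(cm_norm), recall_score(y_true, y_pred, average=None)))  # True
```

In the notebook, `cm_norm` could be passed to `sb.heatmap` with `fmt=".2f"` instead of `fmt="d"`.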

cm = confusion_matrix(y_test, predictions, labels=model.classes_)

# Plotting the confusion matrix with a heatmap

plt.figure(figsize=(9,7), dpi=300)

sb.heatmap(cm, annot=True, fmt='d',

cmap='Blues',

xticklabels=model.classes_,

yticklabels=model.classes_)

plt.xlabel('Predicted labels')

plt.ylabel('True labels')

plt.title('Confusion Matrix')

plt.show()

24.4. Find similar documents with tfidf

So far, we used the tfidf-vectors as feature vectors to train machine learning models. As we just saw, this works reasonably well for predicting review ratings, and the same approach can be used to classify documents as positive/negative (=sentiment analysis).

But there is more we can do with tfidf vectors. Why not use the vectors to compute distances or similarities? This way, we can search for the most similar documents in a corpus!

vectorizer = TfidfVectorizer(min_df=10, max_df=0.2,

max_features=25000,

ngram_range=(1, 3))

tfidf_vectors = vectorizer.fit_transform(X_train)

tfidf_vectors.shape

(32000, 25000)


X_train.shape

(32000,)

24.4.1. Compare one vector to all other vectors

Even though we deal with very large vectors here, computing similarities or angles between these vectors is computationally very efficient. This means we can simply compare the tfidf-vector of a given text to all 32,000 training documents (or the more than 140,000 training documents of the full dataset) in virtually no time!

In order for this to work, however, we should not rely on for-loops, which are inherently slow in Python. Instead, we use optimized functions such as those from sklearn.metrics.pairwise.
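Part of the reason this is so fast: TfidfVectorizer L2-normalizes every row by default (`norm="l2"`), so the cosine similarity reduces to a sparse dot product. A small sketch with made-up reviews checks this with `sklearn.metrics.pairwise.linear_kernel`:

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity, linear_kernel

docs = [
    "cheap beers and football on tv",
    "good selection of beers",
    "delicious paella near the beach",
    "football and cheap tapas",
]
X = TfidfVectorizer().fit_transform(docs)  # rows are L2-normalized (norm="l2")

query = X[0]
cos = cosine_similarity(query, X).ravel()
dot = linear_kernel(query, X).ravel()  # plain dot products, no re-normalization

print(np.allclose(cos, dot))  # True
ranking = np.argsort(cos)[::-1]
print(ranking[0])  # 0: the query document itself comes first
```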

from sklearn.metrics.pairwise import cosine_similarity

review_id = -11  # row position in X_train; other examples to try: -9, -2

query_vector = tfidf_vectors[review_id, :]

cosine_similarities = cosine_similarity(query_vector, tfidf_vectors).flatten()

cosine_similarities.shape

(32000,)

np.sort(cosine_similarities)[::-1]

array([1. , 0.17378527, 0.14885286, ..., 0. , 0. ,

0. ])

np.argsort(cosine_similarities)[::-1]

array([31989, 20283, 29369, ..., 11293, 23850, 0])

top5_idx = np.argsort(cosine_similarities)[::-1][1:6]

top5_idx

array([20283, 29369, 29235, 11133, 4271])

Let us now look at the results of our search by displaying the top-5 most similar documents (according to the cosine score on the tfidf-vectors). Note that we skipped the first entry of the sorted list, because the most similar document is the query document itself (similarity 1). This usually doesn’t work perfectly, but it does work to quite some extent. Try it out yourself and have a look at what documents this finds for you!

print("\n****Original document:****")

print(X_train.iloc[review_id])

for i in top5_idx:

print(f"\n----Document with similarity {cosine_similarities[i]:.3f}:----")

print(X_train.iloc[i])

****Original document:****

Can get buckets of beers for between 4 -5 Euros (5 beers in a bucket). Offer cheap sandwitches, rations of things such as calamari (which I had and was great). They have a terrace and a large screen TV for the football if that floats your boat.

----Document with similarity 0.174:----

Great place to call in before or after the football at Rel Madrid.Good selection of beers and reasonable prices.

----Document with similarity 0.149:----

This place located next to Moncloa station is a cheap option to get beers for a cheap price and tapas. They have tvs broadcasting football games as well.

----Document with similarity 0.147:----

excellent "ensaladilla rusa" and the "ventresca salad" was delicious too. Big cold Heineken pints and a large selection of beers. Will be back when I come back to Madrid

----Document with similarity 0.139:----

Great local bar for breakfast and delicious snacks. Good variety of beers with friendly attentive staff. It’s always busy with local clientele having a meal or watching the football. Pets are welcome too!

----Document with similarity 0.132:----

Mc donalds for tapas. Only 2 types of beers on tap. The service is fast. Location is convenient. Each tapa between 3 to 9 EUR

24.5. Word Vectors: Word2Vec and Co

Tfidf vectors are a rather basic, but still often used, technique. Arguably, this is because they are based on relatively simple statistics and are easy to compute. They typically do a good job of weighting words according to their importance in a larger corpus and allow us to down-weight or ignore words with low discriminative power (for instance, so-called stopwords such as “a”, “the”, “that”, …).

With n-grams, we can go one step further and also count sentence pieces longer than one word. This way, our models can take important word combinations such as negations (“do not like”), comparatives, or specific expressions (“the best”) into account. The price, however, is that we have to restrict the number of n-grams to avoid exploding vector sizes.

TF-IDF vectors and n-grams serve as powerful techniques to represent and manipulate text data, but they have limitations. These methods treat words, or tiny groups of words, as individual, isolated units, devoid of any context or relation to other words. In other words, they cannot capture the semantic meanings of words and the linguistic context in which they are used.

Take these two sentences as an example:

(1) The customer likes cake with a cappuccino.

(2) The client loves to have a cookie and a coffee.

We immediately see that both sentences speak of very similar things. But if you look at the words in both sentences, you will realize that only “The” and “a” are found in both. And, as we have seen in the tfidf part, such words tell very little about the sentence content. All other words only occur in one or the other sentence. Once such stopwords are removed, tfidf-vectors would compute a similarity of zero here.
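A quick check with scikit-learn's built-in English stopword list (`stop_words="english"`) sketches this: without stopwords, the two sentences share no feature at all, while the default settings leave only “the” in common (“a” is already dropped as a single character):

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

sentences = [
    "The customer likes cake with a cappuccino.",
    "The client loves to have a cookie and a coffee.",
]

X_default = TfidfVectorizer().fit_transform(sentences)
X_no_stop = TfidfVectorizer(stop_words="english").fit_transform(sentences)

sim_default = cosine_similarity(X_default[0], X_default[1])[0, 0]
sim_no_stop = cosine_similarity(X_no_stop[0], X_no_stop[1])[0, 0]
print(f"{sim_default:.3f}")  # small, but > 0 (only "the" is shared)
print(sim_no_stop)           # 0.0
```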

This is where we come to word vectors. Word vectors, also known as word embeddings, are mathematical representations of words in a high-dimensional space where the semantic similarity between words corresponds to the geometric distance in the embedding space. Simply put, similar words are close together, and dissimilar words are farther apart. If done well, this should show that “cookie” and “cake” are not the same word, but mean something very related.

The most prominent example of such a technique is Word2Vec [Mikolov et al., 2013][Mikolov et al., 2013].

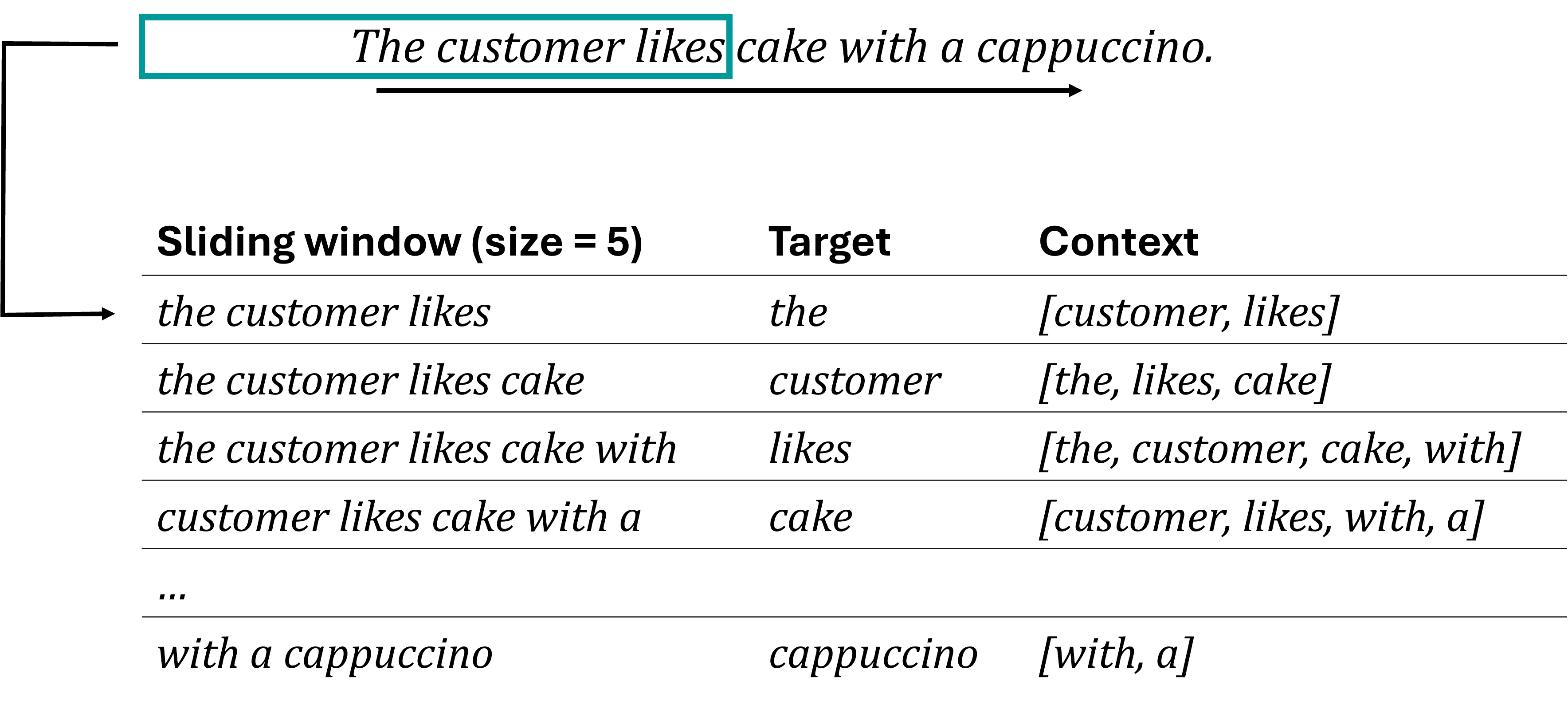

24.5.1. Word2Vec

The fundamental idea behind Word2Vec is to use the context in which words appear to learn their meanings. As shown in Fig. 24.2, a sliding window of a fixed size (here, 5 words) moves over the sentence

“The customer likes cake with a cappuccino.”

At each position, the algorithm selects the central word as the target and treats the remaining words in the window as its context. For example, at the first position the target word is “The”. Since there are no words to its left, its only context words are “customer” and “likes”. This process is repeated for each position in the sentence.
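The sliding window can be sketched in a few lines of plain Python. The sketch lowercases the sentence, drops punctuation, and uses two words on each side of the target, so the full window spans five words. The function name `context_pairs` is our own and not part of any library. Word2Vec implementations such as Gensim generate these pairs internally.

```python
def context_pairs(tokens, window=2):
    """Return (target, context_words) for every position, using up to
    `window` words on each side of the target."""
    pairs = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]   # words before the target
        right = tokens[i + 1:i + 1 + window]  # words after the target
        pairs.append((target, left + right))
    return pairs

example_tokens = "the customer likes cake with a cappuccino".split()
for target, context in context_pairs(example_tokens):
    print(f"{target:>10} -> {context}")
```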

These word-context pairs are fed into the Word2Vec model, which learns to map each word to a unique vector in such a way that words appearing in similar contexts have similar vectors. This vector representation captures semantic similarities, meaning that words with similar meanings or usages are positioned closer together in the vector space. Word2Vec thereby enables various applications such as sentiment analysis, machine translation, and recommendation systems by providing a mathematical representation of words that reflects their meanings and relationships.

Fig. 24.2 Techniques such as Word2Vec learn vector representations of individual words based on their “context”, which is given by the neighboring words.

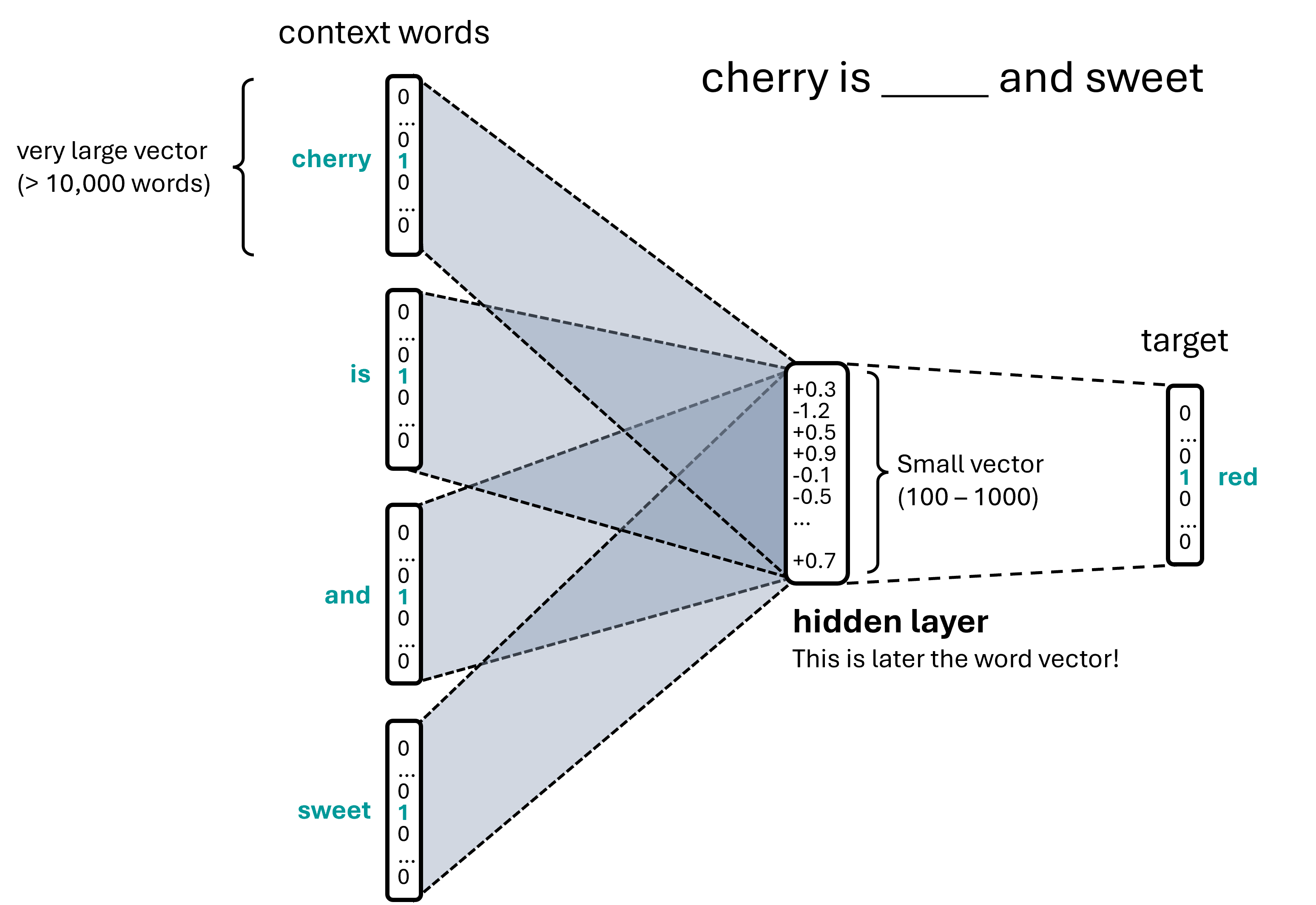

Word2Vec models can be trained using two main methods: Continuous Bag of Words (CBOW) and Skip-Gram. In CBOW, the model predicts a target word based on its surrounding context words, focusing on understanding the word’s context to infer its meaning (see Fig. 24.3). Conversely, the Skip-Gram model predicts the surrounding context words given a target word, emphasizing the ability to generate context from a single word [Mikolov et al., 2013][Mikolov et al., 2013].

Fig. 24.3 The aim of a Word2Vec model is typically to learn vector representations of individual words based on some context words. This is done in such a way that the very large (and very sparse) input vectors are converted into highly compressed float vectors. In this example figure, the context words are “cherry”, “is”, “and”, “sweet”, and the target word would be “red”.
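To make the difference between CBOW and Skip-Gram concrete, the sketch below builds the training examples each variant would use. It assumes the sentence in Fig. 24.3 reads “cherry is red and sweet”. The helper functions are hypothetical and only illustrate the idea. CBOW produces one example per position (context words → target). Skip-Gram produces one example per target/context pair (target → context word). In Gensim, the variant is selected via the `sg` parameter of `Word2Vec`: `sg=0`, the default, gives CBOW and `sg=1` gives Skip-Gram.

```python
def window_context(tokens, i, window=2):
    """Words within `window` positions to the left and right of position i."""
    return tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]

def cbow_examples(tokens, window=2):
    """CBOW: one example per position, (context words) -> target word."""
    return [(window_context(tokens, i, window), t) for i, t in enumerate(tokens)]

def skipgram_examples(tokens, window=2):
    """Skip-Gram: one example per (target, context word) pair, target -> context."""
    return [(t, c)
            for i, t in enumerate(tokens)
            for c in window_context(tokens, i, window)]

fig_tokens = "cherry is red and sweet".split()

print("CBOW example for 'red':", cbow_examples(fig_tokens)[2])
print("Skip-Gram examples for 'red':",
      [pair for pair in skipgram_examples(fig_tokens) if pair[0] == "red"])
```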

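import nltk

# The lemmatizer needs the WordNet data: nltk.download("wordnet")
tokenizer = nltk.tokenize.TreebankWordTokenizer()
lemmatizer = nltk.stem.WordNetLemmatizer()

def process_document(doc):
    """Convert a document into a list of lemmas."""
    tokens = tokenizer.tokenize(doc)
    # Strip surrounding punctuation; pure punctuation tokens become empty strings
    tokens = [x.strip(".,;:!? ") for x in tokens]
    # Drop empty tokens and lemmatize the rest
    return [lemmatizer.lemmatize(w) for w in tokens if w]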

Each review is converted into a list of lemmas. These lists serve as the “sentences” (documents) in Word2Vec terms. To reduce the computation time, we use at most 50,000 reviews (in the run shown here, X_train contains 32,000), but feel free to repeat the following code parts with more data.

from tqdm.notebook import tqdm

sentences = [process_document(doc) for doc in tqdm(X_train.values[:50_000])]

len(sentences)

32000

We will now train our own Word2Vec model using Gensim (see also the Gensim documentation).

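from gensim.models import Word2Vec

# 'sentences' is a list of token lists (one list per document)
model = Word2Vec(sentences,
                 vector_size=200,  # dimensionality of the word vectors
                 window=5,         # max. distance between target and context word
                 min_count=2,      # ignore words that occur fewer than 2 times
                 workers=4)        # number of worker threads for training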

Training models such as Word2Vec can be time-consuming, in particular on very large text corpora. We therefore usually want to save trained models for later re-use.

model.save("word2vec_madrid_reviews.model")

vector = model.wv['delicious']  # get the numpy vector of a word

# look at the first 20 values to get an idea of what these vectors look like
vector[:20]

array([-5.7436562e-01, -8.1009543e-01, -4.7739752e-02, -6.7544931e-01,

-5.5543679e-01, 1.5032168e-01, 1.0333453e+00, -1.7149247e-01,

-1.3441066e+00, -3.6548847e-01, -7.5880289e-02, 1.0271485e+00,

-4.0920833e-01, 1.2779517e-01, 8.7921071e-01, 1.4663085e+00,

1.2579725e+00, 2.7423682e-02, -1.1592590e+00, 1.2713424e-03],

dtype=float32)

Let’s now have a look at which word similarities we get from the Word2Vec model that we just trained on the above sentences.

model.wv.most_similar('delicious', topn=10)

[('yummy', 0.8235727548599243),

('fantastic', 0.8051607012748718),

('tasty', 0.8001575469970703),

('amazing', 0.7819756269454956),

('superb', 0.7730624079704285),

('incredible', 0.7665113210678101),

('fabulous', 0.7665103673934937),

('excellent', 0.7328015565872192),

('awesome', 0.726265549659729),

('phenomenal', 0.7229892611503601)]

model.wv.most_similar('pizza', topn=10)

[('burger', 0.8056879043579102),

('hamburger', 0.7499903440475464),

('paella', 0.7177608013153076),

('pasta', 0.7043079137802124),

('Paella', 0.6906887292861938),

('sushi', 0.6739290356636047),

('ramen', 0.668620765209198),

('topping', 0.6606115102767944),

('sandwich', 0.6537984609603882),

('salad', 0.6531827449798584)]

model.wv.most_similar('horrible', topn=10)

[('awful', 0.8925988674163818),

('terrible', 0.8796250224113464),

('okay', 0.7722311019897461),

('alright', 0.757175087928772),

('exceptional', 0.7494552135467529),

('disgusting', 0.7449309825897217),

('OK', 0.7344291806221008),

('outstanding', 0.7317622303962708),

('appalling', 0.7290202975273132),

('bad', 0.7101510763168335)]

model.wv.most_similar('friendly', topn=10)

[('attentive', 0.8244611024856567),

('welcoming', 0.8047420382499695),

('polite', 0.7995386123657227),

('helpful', 0.7849826216697693),

('courteous', 0.7845814824104309),

('professional', 0.7826632857322693),

('efficient', 0.7396659255027771),

('accommodating', 0.6965122222900391),

('unfriendly', 0.671410083770752),

('pleasant', 0.6668583154678345)]

model.wv.most_similar('chocolate', topn=10)

[('cake', 0.9106695652008057),

('yogurt', 0.8658506274223328),

('churros', 0.8528772592544556),

('lemon', 0.8499927520751953),

('mango', 0.8483800292015076),

('sorbet', 0.8430318832397461),

('strawberry', 0.8354039788246155),

('cream', 0.8277785181999207),

('almond', 0.8238317370414734),

('apple', 0.8219076991081238)]

model.wv.most_similar('coffee', topn=10)

[('beer', 0.7303661704063416),

('snack', 0.72862708568573),

('churros', 0.7169076800346375),

('tea', 0.7130603194236755),

('juice', 0.7117189764976501),

('croissant', 0.6949892640113831),

('refill', 0.6872206330299377),

('dessert', 0.6728480458259583),

('desert', 0.6716121435165405),

('chocolate', 0.6683824062347412)]

24.6. Alternative short-cuts

Training your own Word2Vec model is fun and sometimes really helpful. Here, for instance, it works reasonably well because we have a relatively large text corpus (> 140,000 documents) with a clear topical focus on restaurants and food.

Often, however, you may simply want a model that covers a language more broadly. Instead of training your own model on a much bigger corpus, you can use an already trained model, for instance one of the pretrained models listed on the Gensim website.

Another way is to use spaCy: its larger language models already contain word embeddings!

# Uncomment and run the following line to first download the large English model
#!python -m spacy download en_core_web_lg

import spacy

nlp = spacy.load("en_core_web_lg")


As we have seen before, spaCy converts the text into tokens, but it also does much more. We can look at different token attributes to extract the computed information, for instance:

.text: the original token text.

.has_vector: does the token have a vector representation?

.vector_norm: the L2 norm of the token’s vector (the square root of the sum of the squared values).

.is_oov: is the token out-of-vocabulary, i.e., unknown to the model?

tokens = nlp("dog cat banana afskfsd")

for token in tokens:
    print(token.text, token.has_vector, token.vector_norm, token.is_oov)

dog True 75.254234 False

cat True 63.188496 False

banana True 31.620354 False

afskfsd False 0.0 True
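The `vector_norm` values above are ordinary L2 norms. The short sketch below shows the formula (square root of the sum of squared values) on a made-up vector and compares it with NumPy’s `np.linalg.norm`. For a spaCy token, `token.vector_norm` should correspondingly match `np.linalg.norm(token.vector)`.

```python
import numpy as np

# Made-up 3-dimensional vector, for illustration only
example_vector = np.array([3.0, 4.0, 12.0])

manual_norm = np.sqrt(np.sum(example_vector ** 2))  # sqrt(9 + 16 + 144) = sqrt(169)
library_norm = np.linalg.norm(example_vector)       # NumPy's L2 norm

print(manual_norm, library_norm)
```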

tokens[0].vector

array([ 1.2330e+00, 4.2963e+00, -7.9738e+00, -1.0121e+01, 1.8207e+00,

1.4098e+00, -4.5180e+00, -5.2261e+00, -2.9157e-01, 9.5234e-01,

6.9880e+00, 5.0637e+00, -5.5726e-03, 3.3395e+00, 6.4596e+00,

-6.3742e+00, 3.9045e-02, -3.9855e+00, 1.2085e+00, -1.3186e+00,

-4.8886e+00, 3.7066e+00, -2.8281e+00, -3.5447e+00, 7.6888e-01,

1.5016e+00, -4.3632e+00, 8.6480e+00, -5.9286e+00, -1.3055e+00,

8.3870e-01, 9.0137e-01, -1.7843e+00, -1.0148e+00, 2.7300e+00,

-6.9039e+00, 8.0413e-01, 7.4880e+00, 6.1078e+00, -4.2130e+00,

-1.5384e-01, -5.4995e+00, 1.0896e+01, 3.9278e+00, -1.3601e-01,

7.7732e-02, 3.2218e+00, -5.8777e+00, 6.1359e-01, -2.4287e+00,

6.2820e+00, 1.3461e+01, 4.3236e+00, 2.4266e+00, -2.6512e+00,

1.1577e+00, 5.0848e+00, -1.7058e+00, 3.3824e+00, 3.2850e+00,

1.0969e+00, -8.3711e+00, -1.5554e+00, 2.0296e+00, -2.6796e+00,

-6.9195e+00, -2.3386e+00, -1.9916e+00, -3.0450e+00, 2.4890e+00,

7.3247e+00, 1.3364e+00, 2.3828e-01, 8.4388e-02, 3.1480e+00,

-1.1128e+00, -3.5598e+00, -1.2115e-01, -2.0357e+00, -3.2731e+00,

-7.7205e+00, 4.0948e+00, -2.0732e+00, 2.0833e+00, -2.2803e+00,

-4.9850e+00, 9.7667e+00, 6.1779e+00, -1.0352e+01, -2.2268e+00,

2.5765e+00, -5.7440e+00, 5.5564e+00, -5.2735e+00, 3.0004e+00,

-4.2512e+00, -1.5682e+00, 2.2698e+00, 1.0491e+00, -9.0486e+00,

4.2936e+00, 1.8709e+00, 5.1985e+00, -1.3153e+00, 6.5224e+00,

4.0113e-01, -1.2583e+01, 3.6534e+00, -2.0961e+00, 1.0022e+00,

-1.7873e+00, -4.2555e+00, 7.7471e+00, 1.0173e+00, 3.1626e+00,

2.3558e+00, 3.3589e-01, -4.4178e+00, 5.0584e+00, -2.4118e+00,

-2.7445e+00, 3.4170e+00, -1.1574e+01, -2.6568e+00, -3.6933e+00,

-2.0398e+00, 5.0976e+00, 6.5249e+00, 3.3573e+00, 9.5334e-01,

-9.4430e-01, -9.4395e+00, 2.7867e+00, -1.7549e+00, 1.7287e+00,

3.4942e+00, -1.6883e+00, -3.5771e+00, -1.9013e+00, 2.2239e+00,

-5.4335e+00, -6.5724e+00, -6.7228e-01, -1.9748e+00, -3.1080e+00,

-1.8570e+00, 9.9496e-01, 8.9135e-01, -4.4254e+00, 3.3125e-01,

5.8815e+00, 1.9384e+00, 5.7294e-01, -2.8830e+00, 3.8087e+00,

-1.3095e+00, 5.9208e+00, 3.3620e+00, 3.3571e+00, -3.8807e-01,

9.0022e-01, -5.5742e+00, -4.2939e+00, 1.4992e+00, -4.7080e+00,

-2.9402e+00, -1.2259e+00, 3.0980e-01, 1.8858e+00, -1.9867e+00,

-2.3554e-01, -5.4535e-01, -2.1387e-01, 2.4797e+00, 5.9710e+00,

-7.1249e+00, 1.6257e+00, -1.5241e+00, 7.5974e-01, 1.4312e+00,

2.3641e+00, -3.5566e+00, 9.2066e-01, 4.4934e-01, -1.3233e+00,

3.1733e+00, -4.7059e+00, -1.2090e+01, -3.9241e-01, -6.8457e-01,

-3.6789e+00, 6.6279e+00, -2.9937e+00, -3.8361e+00, 1.3868e+00,

-4.9002e+00, -2.4299e+00, 6.4312e+00, 2.5056e+00, -4.5080e+00,

-5.1278e+00, -1.5585e+00, -3.0226e+00, -8.6811e-01, -1.1538e+00,

-1.0022e+00, -9.1651e-01, -4.7810e-01, -1.6084e+00, -2.7307e+00,

3.7080e+00, 7.7423e-01, -1.1085e+00, -6.8755e-01, -8.2901e+00,

3.2405e+00, -1.6108e-01, -6.2837e-01, -5.5960e+00, -4.4865e+00,

4.0115e-01, -3.7063e+00, -2.1704e+00, 4.0789e+00, -1.7973e+00,

8.9538e+00, 8.9421e-01, -4.8128e+00, 4.5367e+00, -3.2579e-01,

-5.2344e+00, -3.9766e+00, -2.1979e+00, 3.5699e+00, 1.4982e+00,

6.0972e+00, -1.9704e+00, 4.6522e+00, -3.7734e-01, 3.9101e-02,

2.5361e+00, -1.8096e+00, 8.7035e+00, -8.6372e+00, -3.5257e+00,

3.1034e+00, 3.2635e+00, 4.5437e+00, -5.7290e+00, -2.9141e-01,

-2.0011e+00, 8.5328e+00, -4.5064e+00, -4.8276e+00, -1.1786e+01,

3.5607e-01, -5.7115e+00, 6.3122e+00, -3.6650e+00, 3.3597e-01,

2.5017e+00, -3.5025e+00, -3.7891e+00, -3.1343e+00, -1.4429e+00,

-6.9119e+00, -2.6114e+00, -5.9757e-01, 3.7847e-01, 6.3187e+00,

2.8965e+00, -2.5397e+00, 1.8022e+00, 3.5486e+00, 4.4721e+00,

-4.8481e+00, -3.6252e+00, 4.0969e+00, -2.0081e+00, -2.0122e-01,

2.5244e+00, -6.8817e-01, 6.7184e-01, -7.0466e+00, 1.6641e+00,

-2.2308e+00, -3.8960e+00, 6.1320e+00, -8.0335e+00, -1.7130e+00,

2.5688e+00, -5.2547e+00, 6.9845e+00, 2.7835e-01, -6.4554e+00,

-2.1327e+00, -5.6515e+00, 1.1174e+01, -8.0568e+00, 5.7985e+00],

dtype=float32)

nlp1 = nlp(X_train.iloc[0])

nlp2 = nlp(X_train.iloc[1])

nlp1.similarity(nlp1)

1.0

nlp1.similarity(nlp2)

0.871706511976592

nlp1

I you want to discover how the humans lived in Ice Ages come to this restaurant. Expensive, bad quality, good service. Choose another near, there are hundreds.

nlp2

But be warned, it is very much a tourist trap and overpriced. Look around the nearby streets and you'll find a much more authentic experience with much more reasonable prices for tapas, sweets and drinks. It is what it is though- so some people will enjoy the loveliness and convenience and it is a lovely space.
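According to the spaCy documentation, `Doc.similarity` by default computes the cosine similarity between the averages of the documents’ word vectors. Averaging blurs many details, which may partly explain why two fairly different reviews still reach a similarity of 0.87. The sketch below illustrates the idea. The toy 3-dimensional word vectors are invented for this example, and the helper names are our own.

```python
import numpy as np

def doc_vector(words, vectors):
    """Average the vectors of all words that have one (others are skipped)."""
    known = [vectors[w] for w in words if w in vectors]
    return np.mean(known, axis=0)

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical word vectors, made up for illustration only
toy_word_vectors = {
    "expensive":  np.array([0.9, 0.1, 0.0]),
    "overpriced": np.array([0.8, 0.2, 0.1]),
    "bad":        np.array([0.1, 0.9, 0.0]),
    "tourist":    np.array([0.0, 0.3, 0.9]),
    "trap":       np.array([0.2, 0.7, 0.4]),
}

doc_a = ["expensive", "bad"]
doc_b = ["overpriced", "tourist", "trap"]

sim = cosine(doc_vector(doc_a, toy_word_vectors),
             doc_vector(doc_b, toy_word_vectors))
print(f"{sim:.2f}")
```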

24.6.1. Limitations and More Powerful Alternatives

While Word2Vec is a powerful tool, it has limitations. One significant issue is that Word2Vec assigns one vector per word, which poses a problem for words with multiple meanings based on their context (homonyms and polysemes, such as “apple” the fruit vs. “apple” the company).

A more fundamental limitation of Word2Vec and similar algorithms lies in the underlying bag-of-words approach, which removes information related to the order of words. Even constructs like n-grams can only compensate for extremely local patterns, such as differentiating “do not like” from “do like”.

In contrast, deep learning techniques like recurrent neural networks and, more powerfully, transformers can learn patterns across many more words. Transformers, in particular, can learn patterns across entire pages of text [Vaswani et al., 2017], enabling models like ChatGPT and other large language models to use natural language with unprecedented subtlety. Models such as BERT (Bidirectional Encoder Representations from Transformers) [Devlin et al., 2018] and GPT (Generative Pretrained Transformer) [Radford et al., 2019] produce contextualized representations of words, taking the entire sentence or paragraph into account rather than generating static word embeddings. Working in Python also makes it easy to try out and test many different transformer models, for instance via the Hugging Face Transformers library [Wolf et al., 2019].

In conclusion, while TF-IDF and n-grams offer a solid start, word embeddings like those produced by Word2Vec and contextualized representations from transformers provide more advanced methods for working with text by considering context and semantic meaning.

24.7. More on NLP

It should come as no surprise that there is much more to learn about NLP than what was presented in this and the previous chapters.

Very good starting points for going deeper are:

The book “Speech and Language Processing” by Jurafsky and Martin [Jurafsky and Martin, 2024], whose current draft is freely available online.

“Natural language processing with transformers” by Tunstall, von Werra, and Wolf [Tunstall et al., 2022]

Another free online course with Python code material: nlp planet.