11. Correlation Analysis#

Correlation analysis is a fundamental statistical tool used to study the relationship between two or more features. The term “correlation” originates from the Latin correlatio, meaning a mutual relationship. In the realm of statistics, correlation is specifically employed as a “measure of association,” which quantifies the strength and direction of a relationship between two variables.

Understanding correlation is essential in data science as it helps in predicting one variable based on the presence of another, determining the association between variables, and in feature selection during the modeling phase. Correlations are also often used as a starting point for a deeper understanding for a deeper, causal understanding of a certain system (we will later see that correlation and causation are very clearly not the same!).

In everyday life, we say that something correlates when different events or measurements align very well. Or we refer to things such as co-incidence. In the following, however, we are looking for a metric that can numerically describe whether a correlation exists and, if so, how pronounced it is.

11.1. Covariance, (Pearson) Correlation Coefficient#

Covariance

A first option for measuring correlation is through variance, which measures the spread of individual data points around the mean. Expanding from variance, we encounter covariance, a measure that extends the idea to two variables. Covariance quantifies how much two variables change together, but its value is scale-dependent, making it difficult to interpret directly.

The formula for covariance between two variables X and Y is given by:

where \(x_i\) and \(y_i\) are the individual values and \(\overline{X}\) and \(\overline{Y}\) are the means of \(X\) and \(Y\), respectively.

Pearson Correlation Coefficient

To address the limitations of covariance, the Pearson Correlation Coefficient offers a normalized measure of correlation. This coefficient, often simply referred to as the correlation coefficient, is a dimensionless number between -1 and 1. It not only shows the strength but also the direction of the linear relationship between two variables.

The Pearson Correlation Coefficient is calculated as:

This formula normalizes the covariance by the product of the standard deviations of the variables, thus providing a clearer, scale-independent measure of their relationship.

Now let’s have a hands-on look at some examples of correlation, and how they can be quantified.

Show code cell source

import os

import pandas as pd

import numpy as np

from matplotlib import pyplot as plt

import seaborn as sb

# Set the ggplot style (optional)

plt.style.use("ggplot")

Show code cell source

# Create some toy data

# Random number generator

rng = np.random.default_rng(seed=0)

# Genereated data

a = np.arange(0, 50)

b = a + rng.integers(-10, 10, 50)

c = a + rng.integers(-20, 25, 50)

d = rng.integers(0, 50, 50)

corr_data = pd.DataFrame({"a": a,

"b": b,

"c": c,

"d": d})

corr_data.head(3)

| a | b | c | d | |

|---|---|---|---|---|

| 0 | 0 | 7 | 14 | 20 |

| 1 | 1 | 3 | -2 | 39 |

| 2 | 2 | 2 | 2 | 15 |

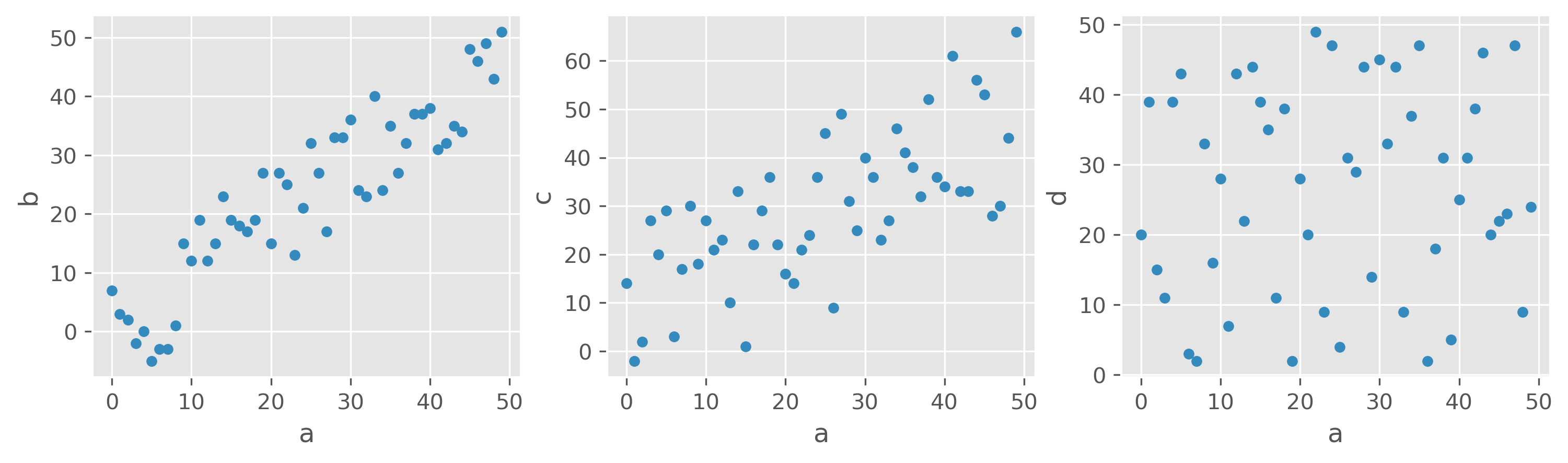

We created some toy data with four features (a, b, c, d). One way to look for interesting relationships between different features is simply to plot one variable (or feature) against another:

Show code cell source

fig, (ax1, ax2, ax3) = plt.subplots(1, 3, figsize=(12,3), dpi=300)

corr_data.plot(kind="scatter", x="a", y="b", ax=ax1)

corr_data.plot(kind="scatter", x="a", y="c", ax=ax2)

corr_data.plot(kind="scatter", x="a", y="d", ax=ax3)

plt.show()

What would you say, which features are correlated, and which are not?

Here, different variables are plotted against each other, a-b, a-c, and a-d. I guess most will agree that the first and second plots show a certain degree of correlation. First, between a and b, and in the second panel between a and c. The third panel shows two features that apparently are entirely unrelated in their behavior. Another thing we can see in those examples is that we should be able to measure different degrees or strengths of a correlation.

We can see the correlation in a<->b. The Pearson Correlation Coefficient measures this type of correlation very well. For this example, the values are:

Show code cell source

print(f"Corr(a, b) = {np.corrcoef(corr_data.a, corr_data.b)[1, 0]:.2f}")

print(f"Corr(a, c) = {np.corrcoef(corr_data.a, corr_data.c)[1, 0]:.2f}")

print(f"Corr(a, d) = {np.corrcoef(corr_data.a, corr_data.d)[1, 0]:.2f}")

Corr(a, b) = 0.91

Corr(a, c) = 0.70

Corr(a, d) = 0.07

We would thus see a strong correlation between a and b and a moderate correlation between a and c. However, there is no noticeable correlation between a and d.

The correlation coefficient allows us to quickly identify significant correlations in the data. For datasets with many variables (or features), we can easily determine the correlations for all combinations of the variables, resulting in what is called a correlation matrix.

11.2. Correlation Matrix#

The correlation matrix for data with the variables a, b, and c would thus be a matrix that contains the Pearson Correlation Coefficients for all possible combinations, i.e.:

a |

b |

c |

|

|---|---|---|---|

a |

\(Corr(a, a)\) |

\(Corr(b, a)\) |

\(Corr(c, a)\) |

b |

\(Corr(a, b)\) |

\(Corr(b, b)\) |

\(Corr(c, b)\) |

c |

\(Corr(a, c)\) |

\(Corr(b, c)\) |

\(Corr(c, c)\) |

The diagonal is trivial and always equals 1. The actual points of interest are the particularly high and low values off the diagonal, as they indicate strong correlations or anti-correlations (with a negative sign).

Using Pandas we can very easily compute the Pearson correlations between all possible pairs of numerical features in a dataset:

corr_data.corr()

| a | b | c | d | |

|---|---|---|---|---|

| a | 1.000000 | 0.913443 | 0.697268 | 0.073561 |

| b | 0.913443 | 1.000000 | 0.594195 | 0.063557 |

| c | 0.697268 | 0.594195 | 1.000000 | 0.071658 |

| d | 0.073561 | 0.063557 | 0.071658 | 1.000000 |

More specific (generated) example

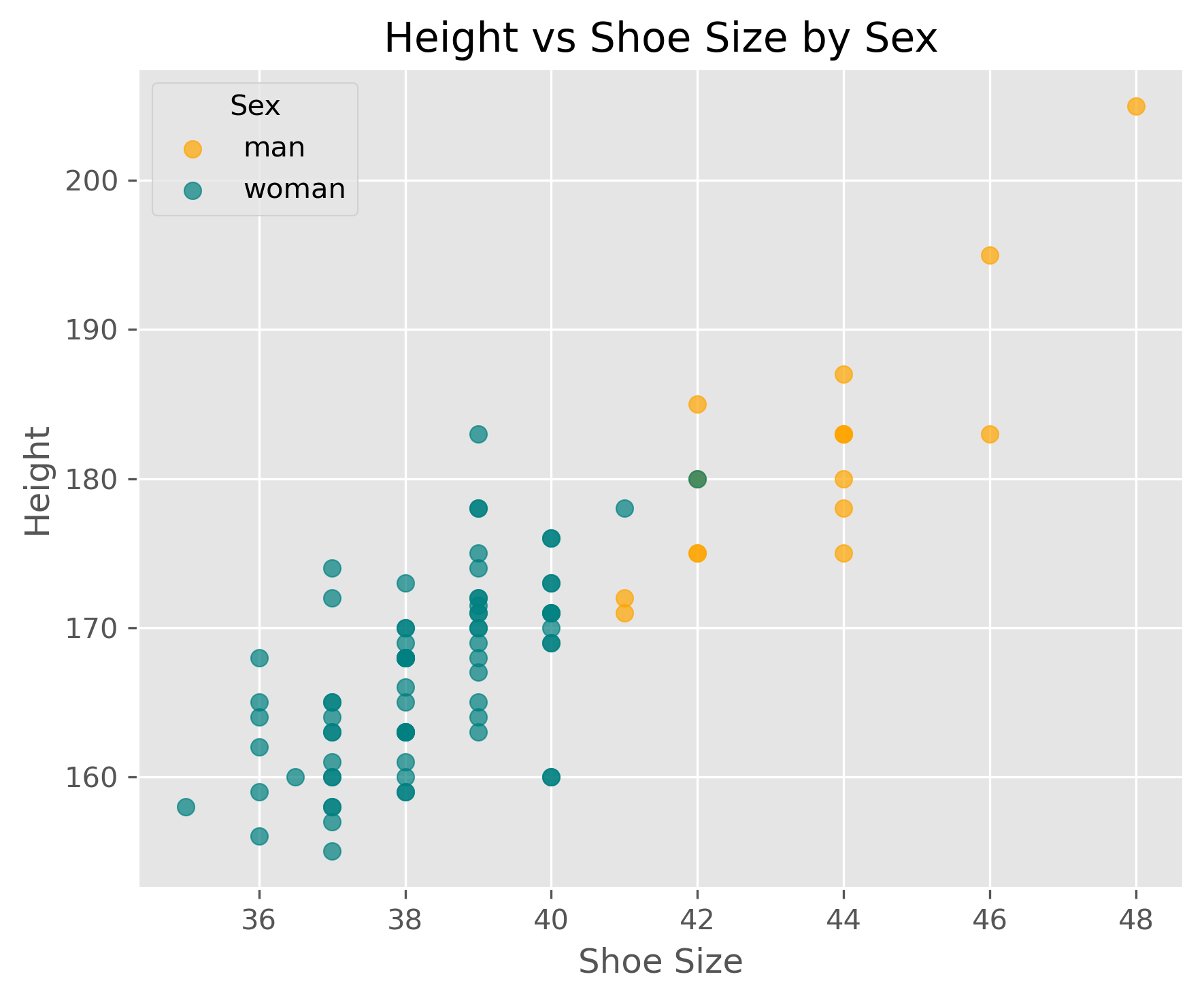

In the following, we continue to work with generated data, but now data that is at least somewhat realistic. We want to see if body measures show any interesting correlations, and we start with (fake) data containing shoe size and height of people.

Show code cell source

filename = r"https://raw.githubusercontent.com/florian-huber/data_science_course/main/datasets/wo_men.csv"

data_people = pd.read_csv(filename)

data_people.head()

| time | sex | height | shoe_size | age | eye_color | weight | blood_pressure | resting_pulse | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 04.10.2016 17:58:51 | woman | 160.0 | 40.0 | 67 | blue | 75.7 | 113.8 | 63 |

| 1 | 04.10.2016 17:58:59 | woman | 171.0 | 39.0 | 21 | brown | 50.2 | 98.9 | 67 |

| 2 | 04.10.2016 18:00:15 | woman | 174.0 | 39.0 | 46 | brown | 97.2 | 118.3 | 75 |

| 3 | 04.10.2016 18:01:17 | woman | 176.0 | 40.0 | 33 | brown | 91.1 | 100.4 | 79 |

| 4 | 04.10.2016 18:01:22 | man | 195.0 | 46.0 | 54 | blue | 67.0 | 143.5 | 67 |

As it was introduced in the previous sections, we would often do a quick first inspection of the data using simple statistical measures. In Pandas this is very easy to do:

data_people.describe()

| height | shoe_size | age | weight | blood_pressure | resting_pulse | |

|---|---|---|---|---|---|---|

| count | 100.000000 | 100.00000 | 101.000000 | 101.000000 | 101.000000 | 101.000000 |

| mean | 165.233800 | 39.77500 | 41.673267 | 77.370297 | 119.494059 | 70.722772 |

| std | 39.817544 | 5.55613 | 16.067426 | 17.321533 | 10.021515 | 5.841436 |

| min | 1.630000 | 35.00000 | 16.000000 | 47.700000 | 95.600000 | 57.000000 |

| 25% | 163.000000 | 38.00000 | 28.000000 | 61.700000 | 113.800000 | 67.000000 |

| 50% | 168.500000 | 39.00000 | 38.000000 | 77.700000 | 119.700000 | 71.000000 |

| 75% | 174.250000 | 40.00000 | 56.000000 | 93.700000 | 125.800000 | 74.000000 |

| max | 364.000000 | 88.00000 | 71.000000 | 105.300000 | 146.500000 | 87.000000 |

Basic cleaning or processing

In the table above, some things seem weird!

The minimum height is 1.63, and the maximum height is 364.0. Something is not correct here. Maybe someone added some fantasy figures to the dataset, one of them apparently with a shoe size of 88. These are typical issues we can quickly discover in a first inspection. We then have to decide what to do about it. Here, we simply decide to keep data only within more or less realistic boundaries.

mask = (data_people["shoe_size"] < 50) & (data_people["height"] > 100) & (data_people["height"] < 250)

data_people = data_people[mask]

We can then move on to computing the correlations:

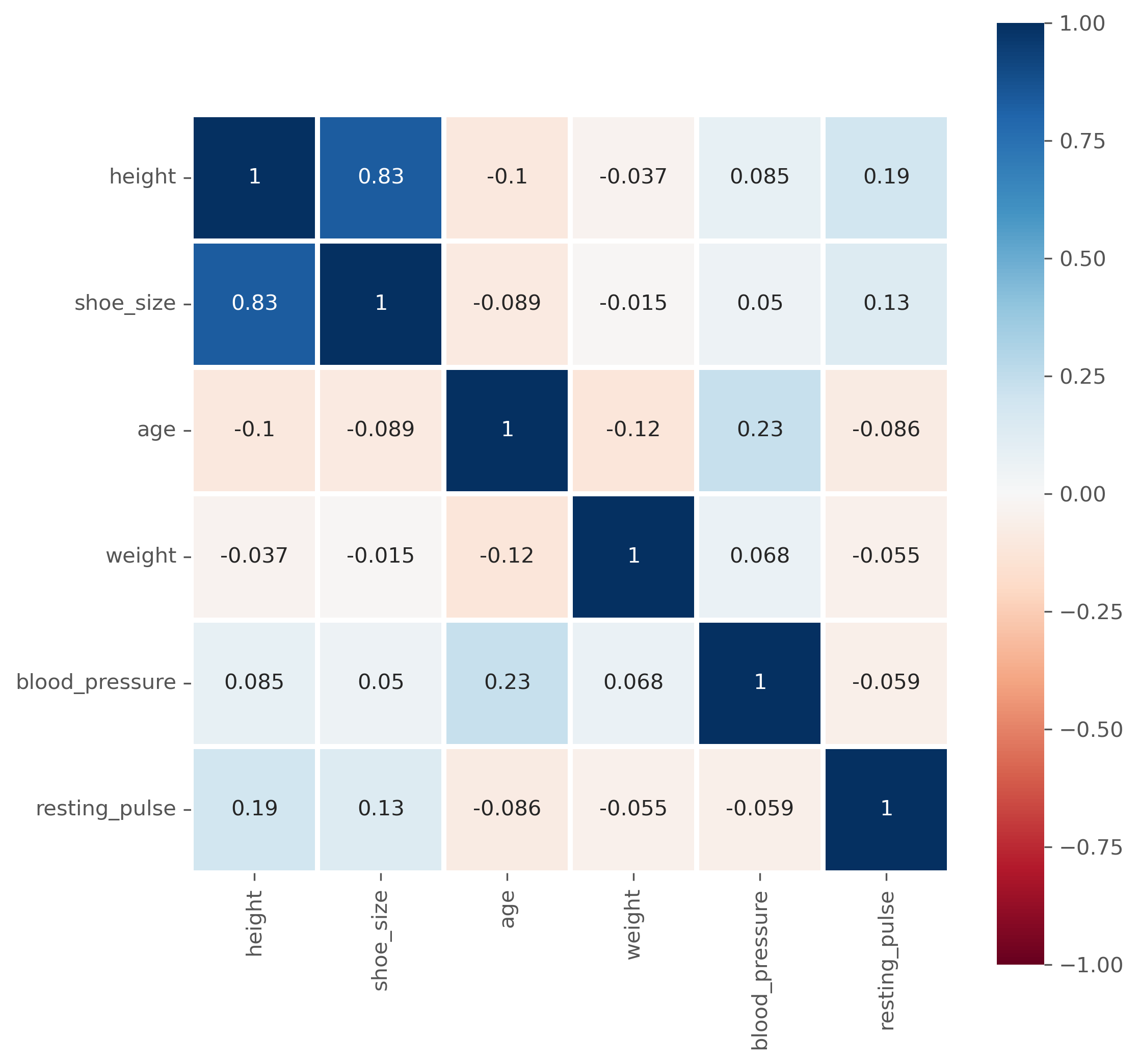

data_people.corr(numeric_only=True)

| height | shoe_size | age | weight | blood_pressure | resting_pulse | |

|---|---|---|---|---|---|---|

| height | 1.000000 | 0.834646 | -0.104061 | -0.036888 | 0.085438 | 0.188240 |

| shoe_size | 0.834646 | 1.000000 | -0.089209 | -0.014565 | 0.049667 | 0.133353 |

| age | -0.104061 | -0.089209 | 1.000000 | -0.120862 | 0.233381 | -0.085840 |

| weight | -0.036888 | -0.014565 | -0.120862 | 1.000000 | 0.067986 | -0.054658 |

| blood_pressure | 0.085438 | 0.049667 | 0.233381 | 0.067986 | 1.000000 | -0.058540 |

| resting_pulse | 0.188240 | 0.133353 | -0.085840 | -0.054658 | -0.058540 | 1.000000 |

Question: What does that actually mean?

Instead of as a matrix with values, the correlation matrix is often also graphically represented, especially for larger datasets, to easily spot particularly high and low coefficients.

fig, ax = plt.subplots(figsize=(8, 8), dpi=300)

sb.heatmap(

data_people.corr(numeric_only=True), # this is required for pandas >= 2.0, but also generally a good idea.

vmin=-1, vmax=1,

square=True, lw=2,

annot=True, cmap="RdBu",

ax=ax,

)

plt.show()

11.3. Limitations of the (Pearson) Correlation Measure#

Pronounced high (or low) correlation coefficients indicate actual correlations in the data, which -in the case of the Pearson correlation- usually means that there is a clear linear dependency between two features.

This approach, however, has several limitations that can complicate the interpretation of such correlation measures. In the following, the most common pitfalls will be presented.

The value of the slope has no influence

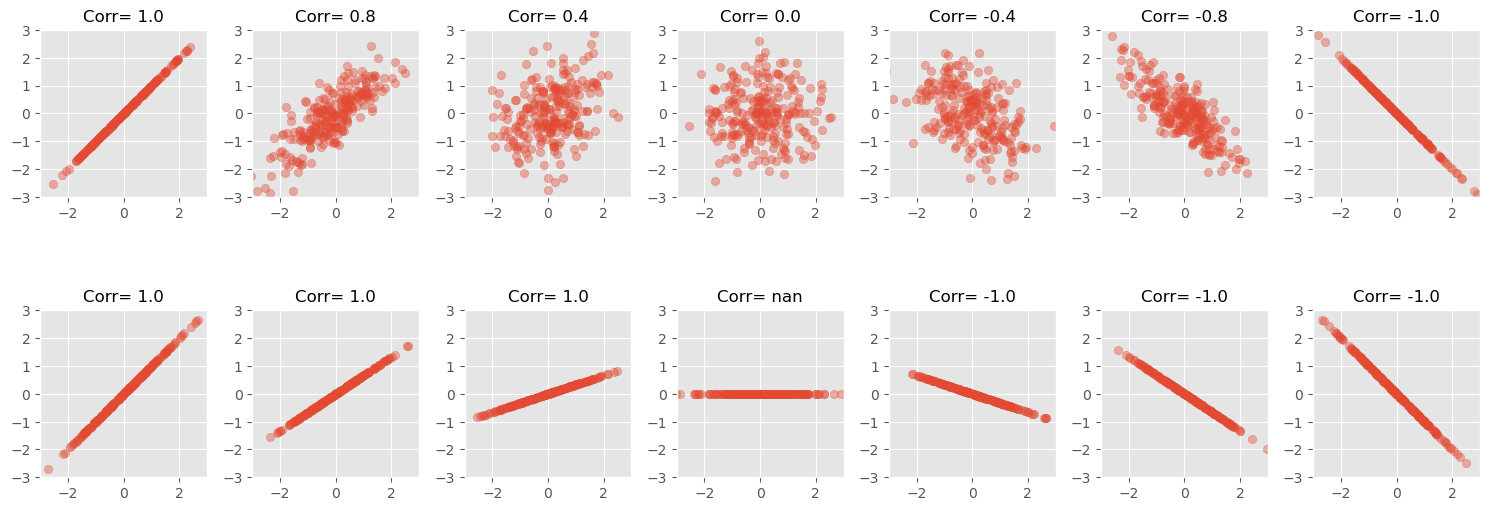

The strength of a correlation is a measure of how clear the linear dependency between two features is. But the result is not dependent on the slope of such a relation between two values (only on the sign of it). See the plot below:

Show code cell source

NUM_POINTS = 250

# Seed for reproducibility

np.random.seed(0)

def scatter_plot(ax, x, y):

""" Create a simple scatter plot."""

ax.scatter(x, y, alpha=0.4)

# Set aspect to equal for better comparison

ax.set_aspect('equal')

ax.set_xlim([-3, 3])

ax.set_ylim([-3, 3])

def scatter_corr(ax, corr_coef, num_points=NUM_POINTS):

""" A function to create scatter plots with different correlation coefficients."""

x = np.random.normal(0, 1, num_points)

y = x * corr_coef + np.random.normal(0, np.sqrt(1 - corr_coef**2), num_points)

scatter_plot(ax, x, y)

ax.set_title(f"Corr= {corr_coef:.1f}", fontsize=12)

def scatter_linear(ax, slope, num_points=NUM_POINTS):

""" A function to create scatter plots with different correlation coefficients."""

x = np.random.normal(0, 1, num_points)

y = x * slope

scatter_plot(ax, x, y)

corr_coef = np.corrcoef(x, y)[1, 0]

ax.set_title(f"Corr= {corr_coef:.1f}", fontsize=12)

# Loop through the correlation coefficients, creating two scatter plots for each

correlation_coefficients = [1, 0.8, 0.4, 0, -0.4, -0.8, -1]

slopes = [1, 2/3, 1/3, 0, -1/3, -2/3, -1]

fig, axes = plt.subplots(2, len(correlation_coefficients), figsize=(15, 6))

for i, coef in enumerate(correlation_coefficients):

scatter_corr(axes[0, i], coef)

for i, slope in enumerate(slopes):

scatter_linear(axes[1, i], slope)

fig.tight_layout()

plt.show()

/home/runner/micromamba/envs/data_science/lib/python3.12/site-packages/numpy/lib/function_base.py:2897: RuntimeWarning: invalid value encountered in divide

c /= stddev[:, None]

/home/runner/micromamba/envs/data_science/lib/python3.12/site-packages/numpy/lib/function_base.py:2898: RuntimeWarning: invalid value encountered in divide

c /= stddev[None, :]

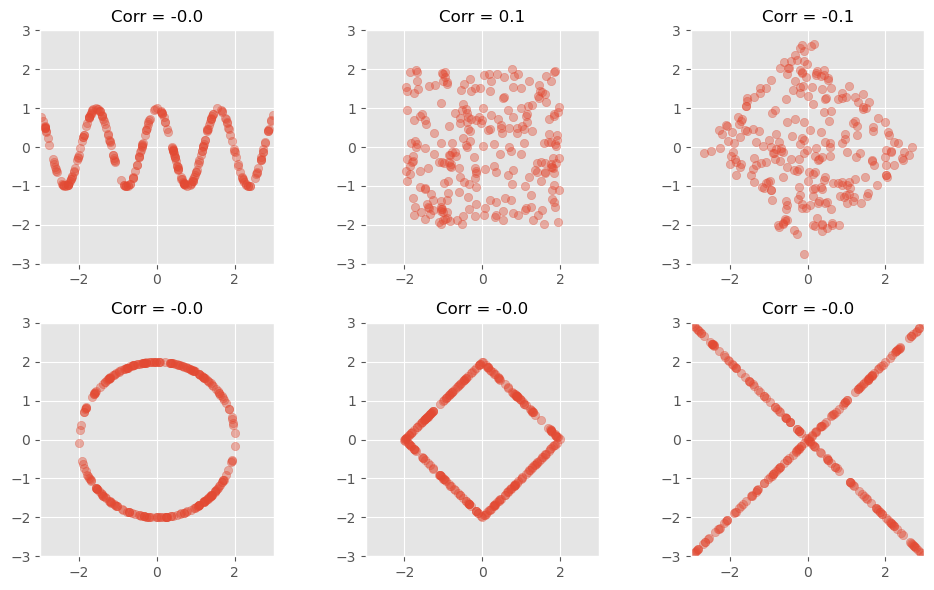

(Pearson) correlation coefficients only detects linear relationships

While pronounced correlation coefficients reflect a clear dependency between values, a low correlation coefficient is more complicated to interpret. Most importantly, a low correlation does not imply that there is no significant relationship!

The following figure shows data with clearly visible pattern (to the human eye), but with no, or nearly no detectable Pearson correlation.

Show code cell source

NUM_POINTS = 250

# Seed for reproducibility

np.random.seed(0)

# Define a function to create scatter plots with non-linear relationships

def scatter_nonlinear(ax, pattern):

# Generate x values

x = np.random.uniform(-3, 3, NUM_POINTS)

if pattern == 'wave':

y = np.cos(x*4)

elif pattern == 'square':

y = np.random.uniform(-3, 3, NUM_POINTS)

x *= 2/3

y *= 2/3

elif pattern == 'square_rotated':

y = np.random.uniform(-3, 3, NUM_POINTS)

points = np.vstack([2/3*x, 2/3*y])

theta = np.radians(45)

c, s = np.cos(theta), np.sin(theta)

rotation_matrix = np.array([[c, -s], [s, c]])

rotated_points = rotation_matrix.dot(points)

(x, y) = rotated_points

elif pattern == 'circle':

x = np.random.uniform(-1, 1, NUM_POINTS)

y = np.sqrt(1 - x**2) * np.random.choice([1, -1], NUM_POINTS)

x *= 2

y *= 2

elif pattern == 'diamond':

y = np.random.uniform(-3, 3, NUM_POINTS)

y = np.sign(y) * (3 - np.abs(x))

x *= 2/3

y *= 2/3

elif pattern == 'x':

y = np.abs(x) * np.random.choice([1, -1], NUM_POINTS)

# Create scatter plot

scatter_plot(ax, x, y)

corr_coef = np.corrcoef(x, y)[1, 0]

ax.set_title(f"Corr = {corr_coef:.1f}", fontsize=12)

# Define the patterns to be visualized

patterns1 = ['wave', 'square', 'square_rotated']

patterns2 = ['circle', 'diamond', 'x']

fig, axes = plt.subplots(2, 3, figsize=(10, 6))

for i, pattern in enumerate(patterns1):

scatter_nonlinear(axes[0, i], pattern)

for i, pattern in enumerate(patterns2):

scatter_nonlinear(axes[1, i], pattern)

fig.tight_layout()

plt.show()

Another prominent example to illustrate how little the Pearson correlation coefficient sometimes tells us is the datasaurus dataset from the last chapter. Here, too, the data is distributed very differently, but the correlation coefficient remains the same.

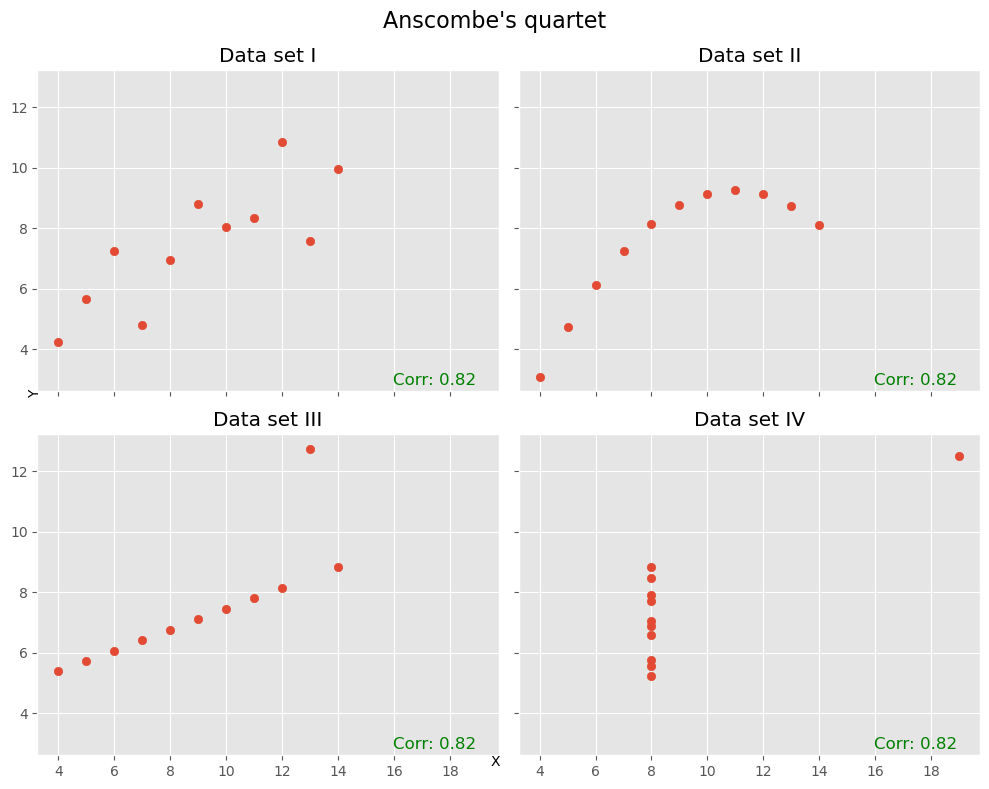

Correlation coefficient is very sensitive to outliers

Another well-known illustrative example for the limitations of the correlation coefficient is “Anscombe’s quartet [Anscombe, 1973]. This includes the already mentioned restriction to mostly linear relationships. But it also demonstrates nicely, that the correlation coefficient can change drastically due to one or few outliers in our data as can be seen in the following plots:

Show code cell source

# Defining the Anscombe's quartet dataset

anscombe_data = {

'I': {

'x': [10.0, 8.0, 13.0, 9.0, 11.0, 14.0, 6.0, 4.0, 12.0, 7.0, 5.0],

'y': [8.04, 6.95, 7.58, 8.81, 8.33, 9.96, 7.24, 4.26, 10.84, 4.82, 5.68]

},

'II': {

'x': [10.0, 8.0, 13.0, 9.0, 11.0, 14.0, 6.0, 4.0, 12.0, 7.0, 5.0],

'y': [9.14, 8.14, 8.74, 8.77, 9.26, 8.10, 6.13, 3.10, 9.13, 7.26, 4.74]

},

'III': {

'x': [10.0, 8.0, 13.0, 9.0, 11.0, 14.0, 6.0, 4.0, 12.0, 7.0, 5.0],

'y': [7.46, 6.77, 12.74, 7.11, 7.81, 8.84, 6.08, 5.39, 8.15, 6.42, 5.73]

},

'IV': {

'x': [8.0, 8.0, 8.0, 8.0, 8.0, 8.0, 8.0, 19.0, 8.0, 8.0, 8.0],

'y': [6.58, 5.76, 7.71, 8.84, 8.47, 7.04, 5.25, 12.50, 5.56, 7.91, 6.89]

}

}

# Plotting the Anscombe's quartet

fig, axs = plt.subplots(2, 2, figsize=(10, 8), sharex=True, sharey=True)

axs = axs.flatten() # To make it easier to iterate over

# Calculating the correlation coefficient for each dataset

correlation_coefficients = []

for i, (key, data) in enumerate(anscombe_data.items()):

axs[i].scatter(data['x'], data['y'])

axs[i].set_title(f"Data set {key}")

corr_coef = np.corrcoef(data['x'], data['y'])[0, 1]

correlation_coefficients.append(corr_coef)

axs[i].text(0.95, 0.01, f'Corr: {corr_coef:.2f}', verticalalignment='bottom', horizontalalignment='right',

transform=axs[i].transAxes, color='green', fontsize=12)

fig.text(0.5, 0.04, 'X', ha='center', va='center')

fig.text(0.04, 0.5, 'Y', ha='center', va='center', rotation='vertical')

plt.suptitle("Anscombe's quartet", fontsize=16)

plt.tight_layout()

plt.show()

In many cases (such as the ones above), Pearson correlation is not enough!

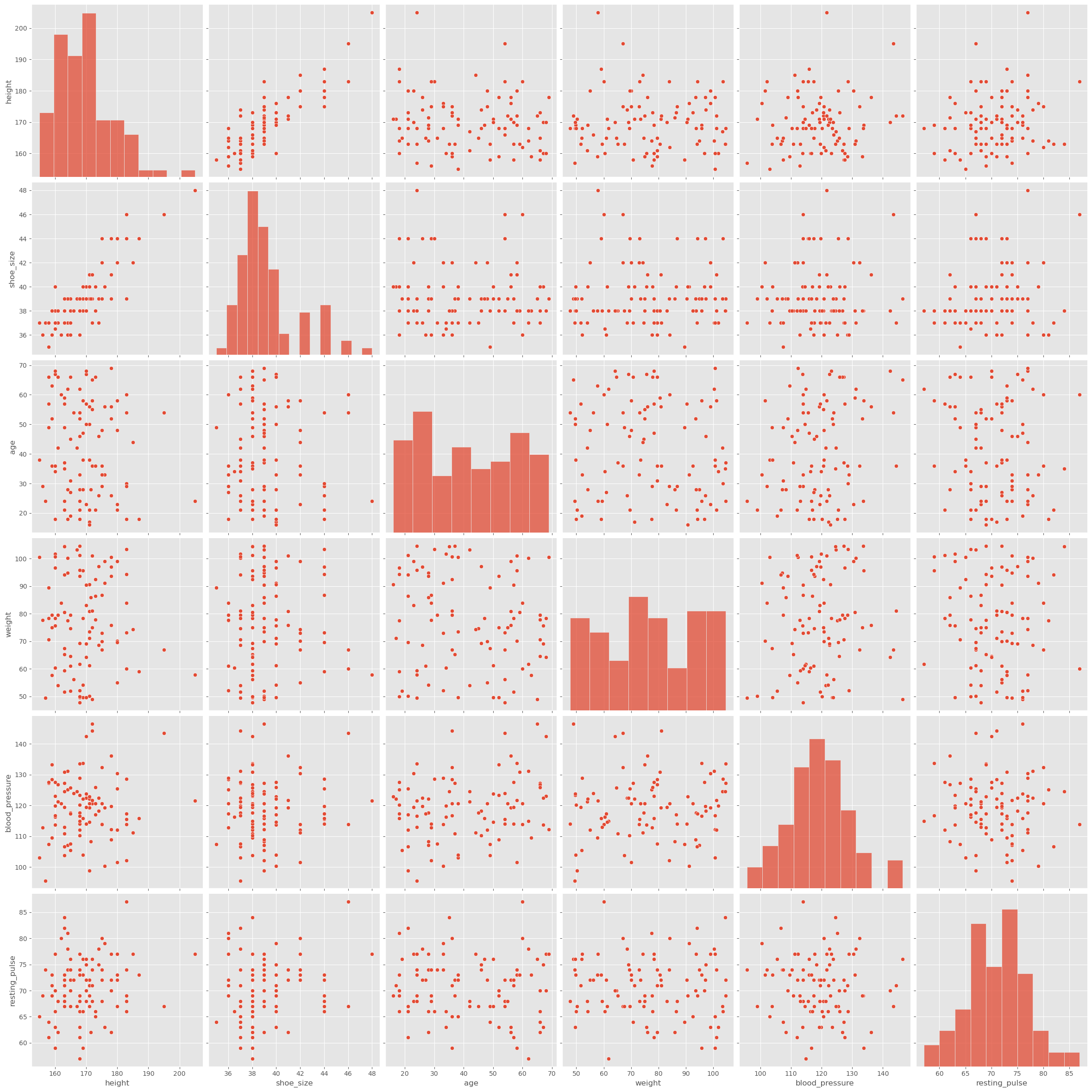

Just like before, with statistical metrics vs. actual distributions, the correlation coefficient sometimes proves to be too rough, and we might want to look more closely at the data. If there aren’t too many variables, this can be done with the pairplot() function from seaborn. We will here do this with the data_people we used further above.

sb.pairplot(data_people, height=4)

plt.show()

It is of course also easily possible to plot only the parts we are most interested in, for instance:

Show code cell source

fig, ax = plt.subplots(figsize=(6, 5), dpi=300)

# plot men

men = data_people[data_people.sex == "man"]

ax.scatter(men.shoe_size, men.height, c="orange", label="man", alpha=0.7)

# plot women

women = data_people[data_people.sex == "woman"]

ax.scatter(women.shoe_size, women.height, c="teal", label="woman", alpha=0.7)

ax.legend(title="Sex")

ax.set_xlabel("Shoe Size")

ax.set_ylabel("Height")

ax.set_title("Height vs Shoe Size by Sex")

plt.tight_layout()

plt.show()

11.4. What Does a Correlation Tell Us?#

If we discover correlations in our data, for example, through high or low Pearson Correlation Coefficients, what can we do with it?

Although high or low coefficients are often the first thing we look for, a value of 1.0 or -1.0 isn’t necessarily a cause for celebration, even though these values describe a maximum correlation.

The reason is simple. If two variables are perfectly correlated, it means one can perfectly describe the other. They do not provide more information than just one of them alone. Moreover, in practice, a correlation of 1.0 (or -1.0) is more likely a result of both variables describing the same thing. Typical examples would be temperature given in both Celsius and Kelvin or the circumference and diameter of wheels. In such cases, correlations mainly tell us which variables can be discarded.

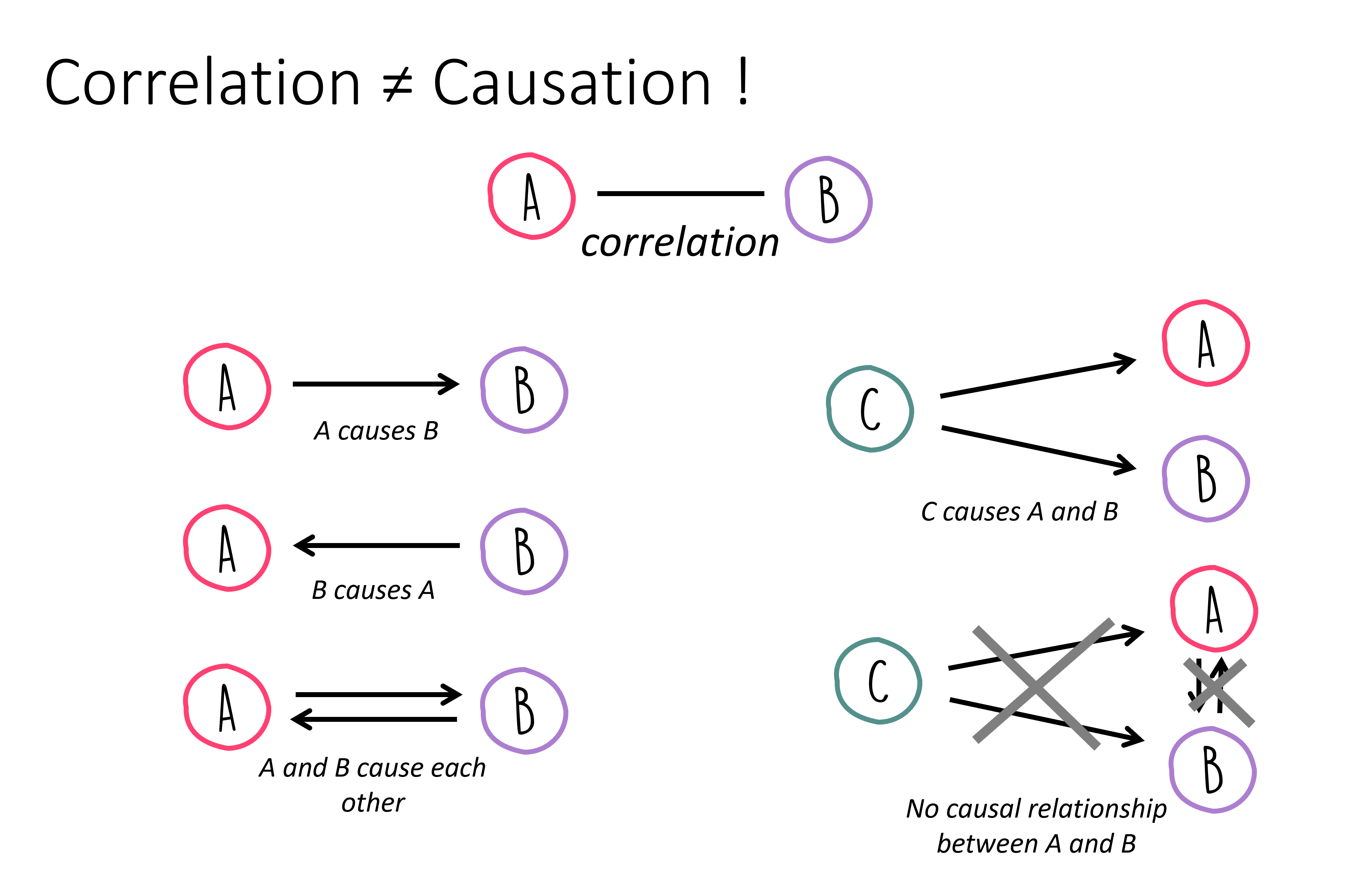

11.5. Correlation vs. Causality#

Searching for correlations within data is often a pivotal step in the data science process. But why? What can we do with a high correlation (that isn’t too high, i.e., equal to 1.0)?

The significance of a discovered correlation entirely depends on the problem posed or the task at hand. In extreme scenarios, two possible outcomes arise:

We’ve Achieved Our Goal! The correlation is all we need. For instance, situations where a connection merely needs to be identified, not necessarily understood. Suppose in an online store we discover that 78% of customers who purchased item X also bought item Y (and this conclusion is supported by a reliable data foundation). This insight would suffice to make relevant suggestions to customers or even reorder items X and Y in tandem.

It’s Just the Beginning! In this case, the correlation is merely an initial hint in the search for deeper relationships. For example, determining if customers purchase item Y because they bought item X. Or, more broadly, if a correlation exists between A and B, whether A is the cause of B. This leads us to the concept of causality…

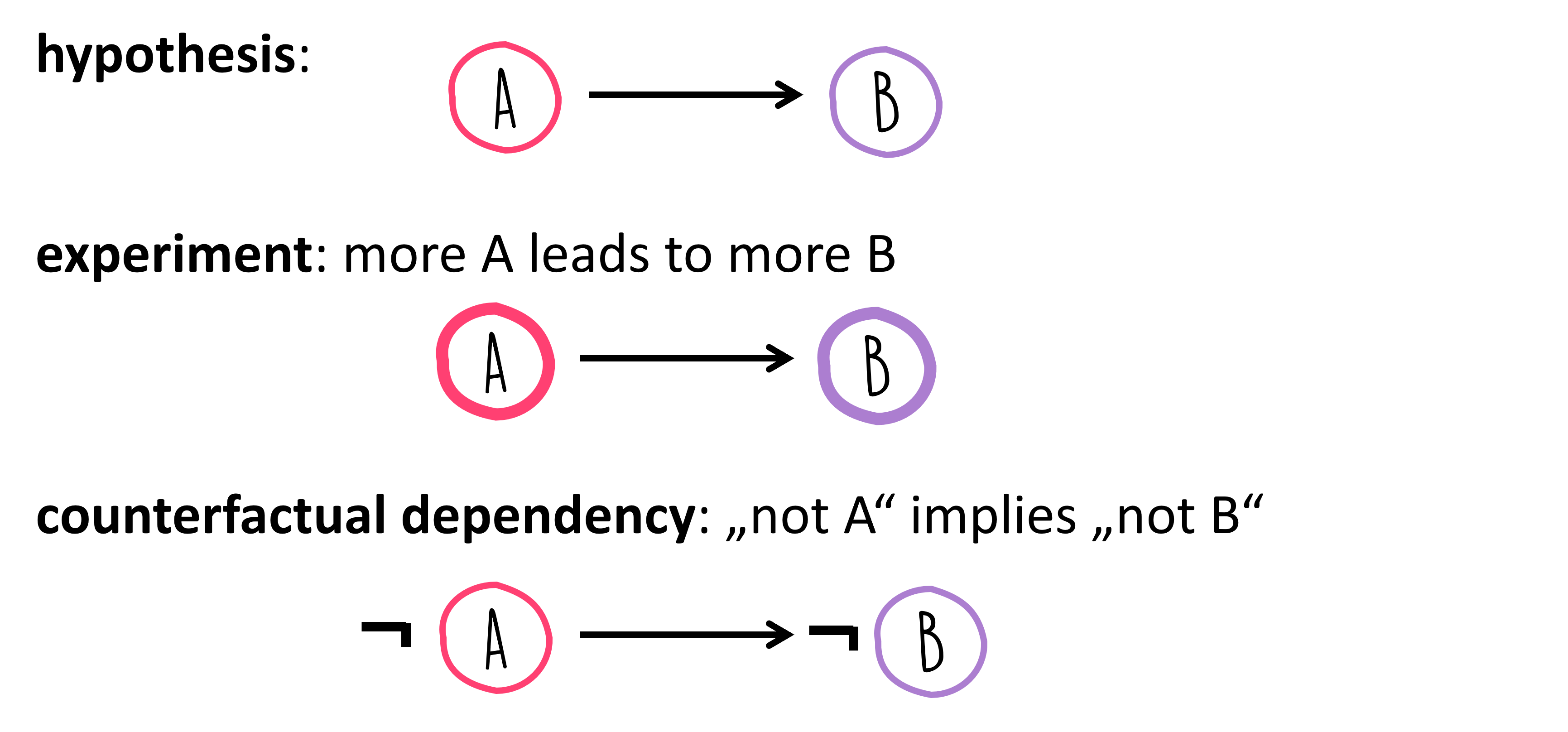

Causality means that if A is the cause for effect B, then B is produced by A. Now, the question arises, how does this differ from correlation?

In daily life, correlation and causality are often confused. This mix-up occurs because it’s inherently human to suspect causal relationships behind observed correlations. Examples of such observations include:

“I ate fruits from tree B and then fell ill.”

“I took medicine X, and an hour later, I felt better.”

In both instances, we’re essentially observing only a correlation, yet we quickly infer a causal link. Without additional information or experiments, a correlation serves at best as a hint for a possible causal relationship. When A correlates with B, this can potentially be explained by various causal relationships:

Things become a tad more complex, as there isn’t one definitive path from identifying a correlation to proving a causal relationship! Often, targeted experiments can help validate or refute hypotheses about causal links. For instance, if eliminating A should result in the absence of B, assuming the causality \(A \rightarrow B\) holds true. However, this only applies if no other underlying cause could “step in” and also trigger B.